"...the core of human (intellectual, cognitive) nature is a computational system which probably has something like the properties of a snowflake"

- Noam Chomsky (2014) serious-science.org/language-design-679

PREFACE

For help with setting up this research project, I would like to thank Professor Richard Clark (Psychology Head of School*), Professor Leon Lack (Psychology of Sleep*), and Professor Marcello Costa (Head of Neuroscience Dept., Flinders Medical Center Teaching Hospital*). Other people who assisted me during the project were Professor David Powers, Professor Paul Calder* and Dr. Julie Mattiske.

This research covers the domain popularised by Daniel Dennett in his best-seller, 'Consciousness Explained'. This research also solves some of the problems eloquently described by Powers & Turk in their 1989 book, 'Machine Learning of Natural Language', published by Springer-Verlag. Finally, many thanks are due to Professors Noam Chomsky and Bernard Baars for acknowledging my work and providing feedback on those aspects of my research they found interesting. If you helped me with my work and your name is not here, I unreservedly apologise for the oversight. Please email me at m.c.dyer@icloud.com if you have any inquiries.

* Across the period of this project, all of these people have transitioned from full-time academia to various states of semi-retirement

ON-LINE PUBLICATIONS

tde-r.webs.com

contains essential material not covered elsewhere in the ai-fu site.

The TDE represents a recursive explanation of a brain's activities in the same way that a Turing Machine (TM) represents a recursive explanation of a computer's activities. The TDE is the neuroanatomical implementation of a basic cybernetic (control systems) template which I have named the 'heterodyne'. The heterodyne is essentially a homeostat in which a feedforward, predictive time-based step has been added to the existing feedback, corrective spatial goal-seeking functionality.
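The difference between a plain homeostat and a homeostat with an added predictive step can be illustrated with a toy control loop. This is a minimal sketch under stated assumptions, not the TDE itself: the function name `run_controller`, the constant `drift` disturbance and the gain value are all hypothetical choices made for illustration.

```python
def run_controller(ticks=50, goal=10.0, drift=1.0, gain=0.5, feedforward=False):
    """Drive a scalar state towards `goal` while the environment adds `drift` each tick.

    feedforward=False: homeostat; it only corrects error it has already sensed.
    feedforward=True:  adds a predictive step that cancels the expected drift
                       before it appears as error (a hypothetical 'heterodyne').
    """
    state = 0.0
    for _ in range(ticks):
        correction = gain * (goal - state)             # feedback: reactive, error-driven
        anticipation = -drift if feedforward else 0.0  # feedforward: predictive
        # The environment pushes the state by `drift` on every tick.
        state = state + correction + anticipation + drift
    return state


homeostat_result = run_controller(feedforward=False)
heterodyne_result = run_controller(feedforward=True)
print(round(homeostat_result, 3), round(heterodyne_result, 3))
```

With these assumed values the feedback-only loop settles at a steady offset of `drift / gain` above the goal, while the loop with the predictive term settles on the goal itself. A real predictive step would have to learn its estimate of the disturbance rather than being handed it.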

chi-cog

This website demonstrates Work-in-Progress, a 'thinking out aloud' in disjointed, personalised snapshots of concepts that will prove important to the final draft of GOLEM theory.

cybercognition

This incomplete website contains further distillation and refinement of the concepts needed to create the monolithic GOLEM (previously called CCE) vision, including a description of the first of two 'aha' (also known as 'Eureka') insights about the equivalence of minds and computers (ie the structural similarity of natural language context dependency and an OS shell script with user prompt).

golemma

Introduces the second of two important insights about Natural Language (NL), the separation of Constituency from Dependency. In a series of hotly debated emails during 2014-15, this author argued his position with famous linguist Noam Chomsky, ending in something of a stalemate. Chomsky has been criticised by others, as well as by this author, for adopting a stance which focusses excessively on grammar (syntax) to the exclusion of meaning (semantics).

My main wish is that Professor Chomsky leaves politics to the chumps (the Trumps), and reconnects with his first love, cognitive linguistics.

DOCUMENT STRUCTURE

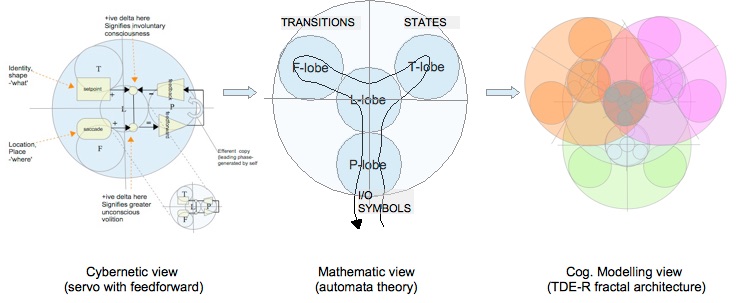

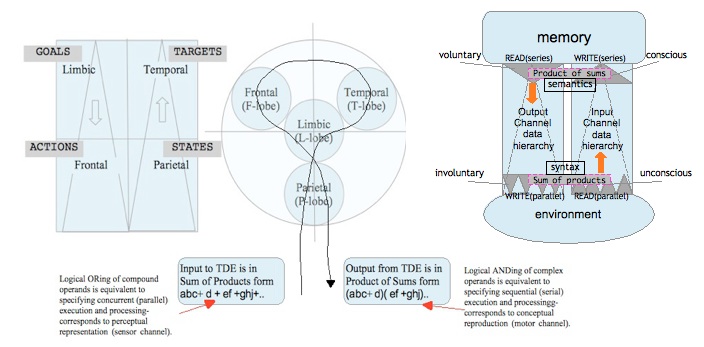

The discussion in Part 1 ('SOLUTION') introduces the TDE, a fractal pattern that governs all aspects of cognition. From the TDE, both in its canonical form, with its three key fractal projections (see Figure 0.1), and in its memory-mapped form, as the GOLEM (see Figure 0.2), a complete and coherent theory of brain, mind and self emerges. This theory is evolution-friendly, based as it is on a simple pattern which hardly changes from simple arthropod to advanced vertebrate. Furthermore, a cybernetic analysis of the TDE yields a two-factor model of subjectivity which combines conventional consciousness with conative (ie tropic or drive-based) volition. This model of subjectivity resolves Libet's Paradox in a much more satisfying way than all previous attempts. Most significantly, level 3 of the TDE fractal represents a functionally and anatomically correct model of human language without linguistics being explicitly addressed during model development. This kind of extrapolation is the gold standard in complex model construction, and strongly suggests that the TDE is an essential part of the human cognitive mechanism (biological intelligence, BI). The TDE is precisely the kind of 'unified theory of cognition' which AI pioneer Allen Newell hoped to present in his opus[75]. In this work, he advises cognitive science to 'turn its attention to developing theories of human cognition which cover the full range of human perceptual, cognitive and action phenomena'. Unfortunately, he does not seem to follow his own excellent advice, opting instead for the necessarily narrow vision of AI based upon procedural programming of so-called 'production systems'. Lest we judge him too harshly, it is important to be mindful of the relative paucity of expertise in true machine intelligence that existed until very recently. When Newell started his research, all AI problems looked like nails (ie search trees). We surely cannot blame the fellow for building a truly grand hammer (SOAR, his 'cog'). Nevertheless, his core idea (the central vision of his 'call to arms', if you will), that the 'cogs' we build should deliberately cover the full range of human cognitive activities, is above all the one that has most inspired the design of the TDE/GOLEM.

While Part 1 of this website presents a detailed, concrete Strong AI 'cog', based on sound, thoroughly researched BI (biological intelligence) principles, I explore the basis of its credibility in more detail in Part 2 ('CREDIBILITY'). The purpose of Part 2 is to raise reasonable doubt about current approaches, clearly pointing out where previous science is patently or probably wrong, and, if possible, precisely why.

Finally, in Part 3 ('IMPLEMENTATION'), I outline the steps necessary to implement a TDE-R/GOLEM using conventional computational systems.

In this third and final section, I engineer an in-principle design of nothing less than a conscious, emotional machine, with thoughts (phenomenology) and subjective responses very much like our own, although not nearly as complex! This design describes a human-equivalent terrestrial intelligence, capable of levels of language use and introspection that are equivalent to our own. The TDE/GOLEM will be an individual software-equivalent self with an internal mental 'life'. It will be able to construct an internal self-narrative which will have many of the features of true human introspection. If it is allowed to develop as a human infant develops, there is no a priori reason why it should not be able to truly pass the Total Turing Test.

ABSTRACT

In these pages-

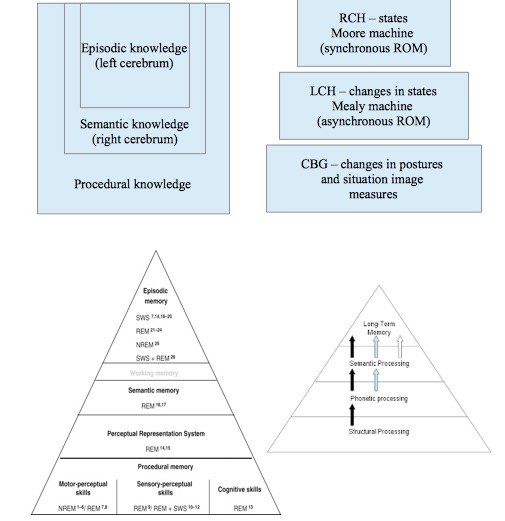

(1) FRACTAL - Informed by direct observation of CNS anatomy, and inspired by Mandelbrot's fractals, I demonstrate my success at reverse engineering human cognition at all levels. My discovery is the TDE (Trifractal Differential Engine), a tri-level fractal differential state machine (DSM) with subjective semantics. The model is supported by Tulving's CNS knowledge architectonics (ie episodic / semantic vs declarative / procedural knowledge subtyping). I believe that I have discovered 'Chomsky's Snowflake', the fractal pattern which underpins all biological intelligence.

(2) SEMANTIC - I demonstrate my success at reverse engineering vertebrate cognition as a semantic engine; ie a set of hierarchical knowledge codes (semantics) operated upon by sequential computational processes (syntax). I have heeded the warning issued by John Searle in his famous gedankenexperiment, The Chinese Room.

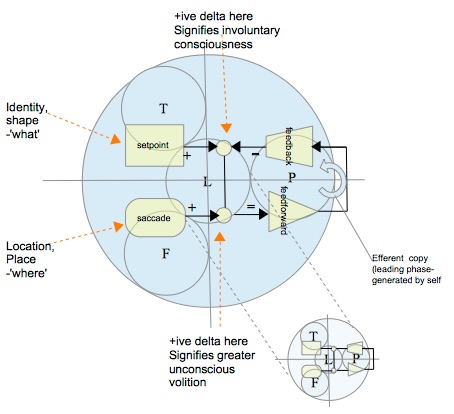

(3) SUBJECTIVE EMOTIONALITY - I introduce the ipsiliminal dyad (ID), or to give it its more long-winded name, the subjective orthogonal emotionality dyad (a two-component measure which is NOT a vector)- this is what drives us, what gives our thoughts agency, and infuses our behaviour with meaning and passion, planning and cunning. The ID acts at all three TDE levels. The TDE operates cybernetically (ie using drive state differentials) within an orthogonal subjective (volition x conscious) space. When its combined effects over the 3 TDE levels interact with the endocrine system, the result is what we call 'emotions'- our individual predictive assessment of, and reactive judgement of each situation encountered or imagined.

(4) LINGUISTIC - The TDE is a fractal pattern which uniquely determines the architectonics of our cognitive processes. When investigated intensively, as a disembodied canonical pattern, it reveals the two-component subjective emotionality dyad. When investigated extensively, it reveals the universal linguistic nature of all cognitive computations, especially those at TDE level 3, which correspond to all of the types of human language use.

(5) EMBODIED - I demonstrate that the solution I present is an embodied one, using the word in the same sense as, for example, Brooks [40]. I demonstrate that the TDE1 level implements embodied computation, that the TDE2 level implements embedded situational computation, and that the TDE3 level implements linguistic computation (Chomsky/Montague i-language).

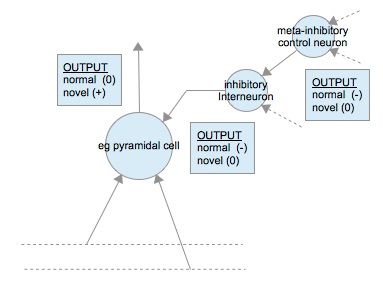

(6) CIRCUIT-LEVEL NEUROADAPTATION (NOT SYNAPTIC PLASTICITY) - I demonstrate a more viable and biologically plausible neuroadaptation based on Warren McCulloch's original semantic network design, NOT the same as the MCP neuron. This research shows that our brain does not (indeed, from timing considerations, cannot) rely on synaptic changes alone. Characteristic waking and sleeping EEG traces from each neural layer are produced by 'ganged' inhibitory interneurons, controlled by ascending and descending neural reticular systems, which use saccadic mechanisms to open and close temporal input sample windows. That is, these semantic networks exhibit neuroadaptation based on changes in circuit state, NOT changes in synaptic state.

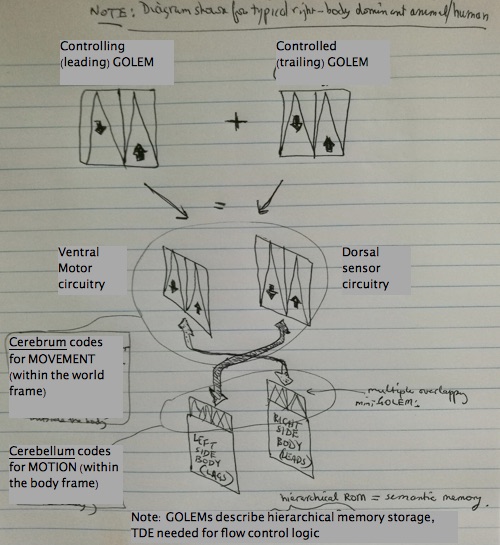

(7) MEMORY STATE PERSISTENT LATCH CIRCUITS - I demonstrate that memory state in GOLEM is stored in neural loops which are kept high or low by meta-inhibitory latch circuits, each one consisting of a pair of inhibitory auxiliary interneurons connected back-to-back.

(8) PYRAMIDAL CIRCUITS IN CEREBRUM AND CEREBELLUM ENCODE SPACE AND TIME - I demonstrate the key roles that pyramidal place and timing circuits in cerebrum and cerebellum play in behaviour planning. These two systems interact to implement embedded situational computation (equivalent to Powers' PCT).

(9) SLEEP/WAKE PHENOMENOLOGY PART OF NEUROADAPTATION MECHANISM - I also suggest that sleep and waking form the foundation of a global learning methodology, what has now become known as 'neuroplasticity'. This model is fully supported by sleep and EEG research data. The dyadic emotional cybernetics of the TDE model (see item (3) above) gives rise to four hybrid subjective states which represent dynamic attractors in typical learning process trajectories.

(10) COMPLETES UNDERSTANDING OF LANGUAGE: WHAT IS IT, WHY IS IT - I demonstrate how language works as an intersubjective computation at the third TDE level (TDE3). I show that linguists have failed to understand that, at the theoretical stage, information behaviour in the sensor/input channel must be analysed separately from that in the motor/output channel. I document the destructive and deleterious effect that this seemingly insignificant error has had on our attempts to construct a linguistic theory that is an integral constituent of general cognition ('the language wars').
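The latch circuit of item (7) above has a familiar counterpart in digital electronics: the set-reset latch built from two cross-coupled NOR gates, where each gate's output inhibits the other. The sketch below models that electronic analogue only. Mapping each NOR gate onto one inhibitory interneuron is an assumption made here for illustration, and the names `InhibitoryLatch`, `set_pulse` and `reset_pulse` are hypothetical.

```python
def nor(a, b):
    """A unit that is active only when neither of its inhibitory inputs is active."""
    return not (a or b)


class InhibitoryLatch:
    """Two mutually inhibiting units holding one bit (a cross-coupled NOR latch)."""

    def __init__(self):
        self.q = False      # stored bit: True means the loop is held 'high'
        self.q_bar = True   # the complementary unit

    def step(self, set_pulse=False, reset_pulse=False):
        # Let the two cross-coupled units settle; three passes suffice here.
        # Pulsing set and reset together is not a meaningful input for this latch.
        for _ in range(3):
            self.q = nor(reset_pulse, self.q_bar)
            self.q_bar = nor(set_pulse, self.q)
        return self.q


latch = InhibitoryLatch()
history = [
    latch.step(set_pulse=True),    # a brief pulse drives the loop high
    latch.step(),                  # the state persists with no input
    latch.step(reset_pulse=True),  # a brief pulse drives the loop low
    latch.step(),                  # and that state persists too
]
print(history)
```

The point of the analogy is that the stored state depends on which unit is currently suppressing the other, not on any change to the strength of the connections between them.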

INTRODUCTION AND OVERVIEW

Filling the gap between medical science below and computer science above

In spite of their explicit verbal protestations and ad hoc theoretical speculations, most modern, educated people believe implicitly in the enterprise of modern medicine and its (rationalist, materialist, monist) scientific underpinnings. They obey the psychiatrist's professional advice, take their meds and smile, subconsciously secure in the knowledge that modern psychopharmacological intervention works.

So...the brain is a machine! It's all good, then. We should therefore be ready to mobilise our collectively encyclopaedic knowledge about machines, and mechanistic behaviour, and proceed to apply it to the 'wetware', those inner realms which the sciences of neuroscience and psychology carved up between them over a century ago. All we need to do is separate these historical players, and create a thematic, empirical and ontological gap big enough to allow the insertion of a relatively new third party, theoretical computer science, a.k.a. Artificial Intelligence (AI).

Not so fast! Before we can blithely press the button and go, we must make some long overdue changes ('repairs') to both disciplines. (A) We need to change Psychology from an apologetic discipline that is envious of the objective foundations of the so-called 'hard' sciences, to a millennial pioneer, the first science to be manifestly based on 'soft' subjective principles, proud of a history rooted in the Victorian-era phenomenological traditions that once formed the orthodoxy on both sides of the Atlantic. (B) We must encourage computer science to re-incorporate cybernetics into a new hybrid post-digital superdiscipline [76] whose institutional and historical DNA are capable of reinvigorating theoretical cognitive science.

Finite State Machine/ Cybernetic Hybrid

The reverse engineering of the human CNS knowledge sub-type hierarchy, as originally undertaken by Endel Tulving[1], was used as the research starting point. Tulving's analysis of CNS anatomy reveals a distribution of knowledge sub-types which fits the four major lobes of the brain. These are also the four lobe types which constitute the TDE fractal. The exact role of the occipital lobe in TDE theory was not revealed until later. Originally, it was regarded as part of the TDE's P-lobe (an abstraction of the parietal lobes). Evolution seems to have used a basic four-lobed fractiform pattern, ie one which has been recursively generated at multiple size scales during neural development, as a template to build the human brain. The local (to each level, that is) template pattern I named the Tricyclic Differential Engine (TDE). The reasons why I invented this acronym now seem rather historical and irrelevant, but like any name, after several years of use, it became too late to change it. From 'TDE' I coined the derived term TDE-R to represent the TDE in its global recursive form. That is, the TDE pattern is a fractal[56][57] which forms the recursive 'kernel' or generatrix of the TDE-R. The architectonics[74] of the TDE-R closely represents the neuroanatomical variation in knowledge sub-types and memory class in Tulving's model.

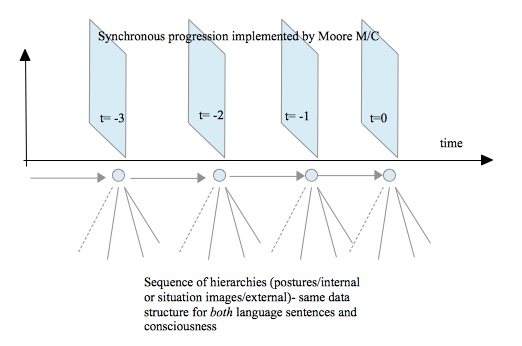

The TDE is a completely new type of finite state automaton based upon cybernetic concepts. That is, although the TDE is a finite-state automaton, in a very similar way to a Turing Machine, it has been modified to include drive states, or differentials. Further analysis reveals that the TDE is built up from two well-known FSM sub-types, the synchronous Moore machine and the asynchronous Mealy machine. This is a somewhat surprising finding, since Moore and Mealy ROMs are not considered as natural phenomena, but are presumed to be wholly artificial concepts invented by, and for, the highly specialised world of digital electronics engineering. It came as very welcome news indeed for the few remaining die-hards who firmly maintain a belief that the brain is a type of computer, not fundamentally different from the one I use to type these words. Later, I demonstrate this by application of TDE/GOLEM theory. The TDE-R exemplifies both embodiment and 'situatedness'. These design principles feature prominently in the best contemporary cognitive models.

Synchronous structures, both proximal/subject/self and distal/object/other, are modelled as analogs of bodily posture by Moore machine ROMs (this is a typical example of 'embodied' computation). After input is received, the state change must wait for a saccadic sample sweep (equivalent to a microprocessor's clock pulse) to occur before output is emitted, synchronously with the state change. Asynchronous structures, both proximal/subject/self and distal/object/other, are modelled as embodied analogs of reflexes by Mealy machine ROMs. As soon as input is received, output is emitted, all on the same state transition arc; there is no need to wait for a feedforward sampling signal such as the clock pulse in microelectronic circuit design. This makes TDE/GOLEM an embodied computer design, an information modelling approach promoted by famous roboticist and fellow Flinders University alumnus Rodney Brooks.
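The timing contrast described above can be made concrete with two small table-driven machines. This is an illustrative sketch following the text's description (Moore output waits for a clock tick, Mealy output is emitted on the transition arc itself). The class names, the `receive`/`clock` methods and the posture and reflex tables are all hypothetical and are not taken from the TDE/GOLEM design.

```python
class MooreMachine:
    """Output depends on the state alone and appears only when clocked."""

    def __init__(self, transitions, outputs, start):
        self.transitions = transitions  # (state, input) -> next state
        self.outputs = outputs          # state -> output
        self.state = start
        self.pending = None

    def receive(self, symbol):
        self.pending = symbol  # input is latched; nothing is emitted yet
        return None

    def clock(self):
        # The sampling pulse: state changes and output is emitted together.
        if self.pending is not None:
            self.state = self.transitions[(self.state, self.pending)]
            self.pending = None
        return self.outputs[self.state]


class MealyMachine:
    """Output is attached to the transition arc and is emitted immediately."""

    def __init__(self, transitions, start):
        self.transitions = transitions  # (state, input) -> (next state, output)
        self.state = start

    def receive(self, symbol):
        self.state, output = self.transitions[(self.state, symbol)]
        return output


# Hypothetical posture map (Moore) and reflex table (Mealy).
posture = MooreMachine(
    transitions={("sitting", "rise"): "standing", ("standing", "sit"): "sitting"},
    outputs={"sitting": "hold-seated", "standing": "hold-upright"},
    start="sitting",
)
reflex = MealyMachine(
    transitions={("relaxed", "tap"): ("relaxed", "kick")},
    start="relaxed",
)

before_clock = posture.receive("rise")  # no output until the clock pulse
after_clock = posture.clock()           # state change and output together
immediate = reflex.receive("tap")       # output on the same transition arc
print(before_clock, after_clock, immediate)
```

In the Moore case a caller sees nothing until `clock()` is invoked, which stands in for the sample sweep; in the Mealy case `receive()` returns the response directly.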

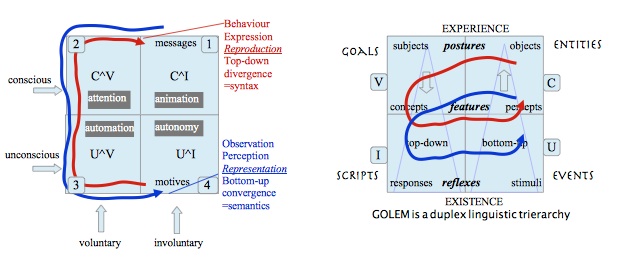

Semantic Computation (i-language) involves coding with sets (types) not elements (exemplars)

The neuroscientist, David Marr, reintroduced teleology (intended function, purpose) into cognitive science with his top-down, computation-first, trilayer analysis of the visual cortex (see [78] as typical of his work at the time). The linguist, Noam Chomsky, introduced the idea of linguistics as an internal, not external phenomenon- as the organisational principle underlying cognition's internal structure. Chomsky's analysis of programming languages gives a bottom-up structure to biological computation. Together, we can use the term Chomsky-Marr Trierarchy to describe the operation of the duplex information channels that make up the GOLEM, TDE's memory model.

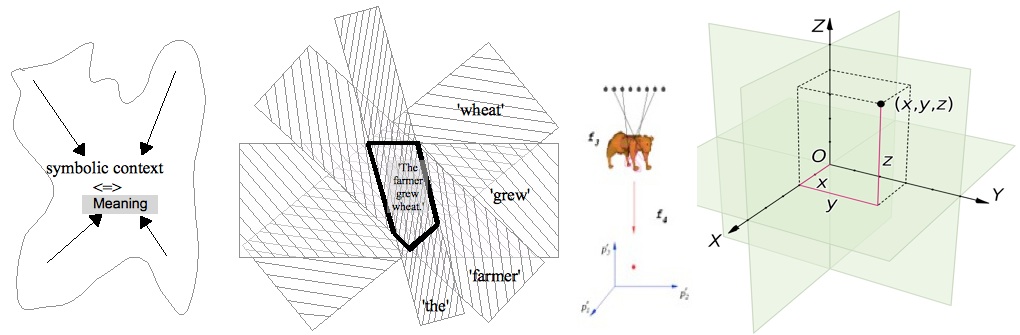

As infants, we ride a steep learning curve as we acquire all the words (and their meanings, of course) we require to perform as adults. Consider this: the typical factory robot is usually loaded with a new set of specific spatial data each time its global task is changed, even if the same kernel program controls the general form of its end effector motions. No such detailed rewriting of data to working memory occurs in humans. Instead, by learning language first, our mind is able to produce and accept all semantic values ever required by manipulating contextually meaningful hierarchical locations. Language is a semantic (not syntactic) system; the syntax follows from the semantics, not the other way around. Ambiguity in language is not, as it is so often portrayed, an inconvenient side-effect. Rather, semantics works at the level of types (implemented as sets of possible candidate values), not at the level of instances, as existing coding paradigms do. Each word denotes a set of semantic possibilities. By combining words in a sentence, we are solving simultaneous first order logic equations in as many unknowns as there are words. The backward chaining behaviour (solving Horn clauses) of Prolog is the closest current example.
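The idea that each word denotes a set of candidates, and that a sentence narrows those sets jointly, can be sketched as a tiny constraint problem. The example below uses brute-force enumeration rather than Prolog-style backward chaining, and the lexicon, the sense labels and the `COMPATIBLE` relation are invented purely for illustration.

```python
from itertools import product

# Each word denotes a SET of candidate senses (hypothetical toy lexicon).
LEXICON = {
    "bank": {"riverbank", "financial_institution"},
    "deposit": {"sediment_layer", "money_paid_in"},
    "interest": {"curiosity", "money_earned"},
}

# Pairs of senses that can coexist in one reading (hypothetical constraints).
COMPATIBLE = {
    frozenset({"riverbank", "sediment_layer"}),
    frozenset({"financial_institution", "money_paid_in"}),
    frozenset({"financial_institution", "money_earned"}),
    frozenset({"money_paid_in", "money_earned"}),
}


def readings(words):
    """Return every assignment of one sense per word that satisfies all pair constraints."""
    candidates = [sorted(LEXICON[word]) for word in words]
    consistent = []
    for combo in product(*candidates):
        pairs_ok = all(
            frozenset({a, b}) in COMPATIBLE
            for i, a in enumerate(combo)
            for b in combo[i + 1:]
        )
        if pairs_ok:
            consistent.append(dict(zip(words, combo)))
    return consistent


two_words = readings(["bank", "deposit"])
three_words = readings(["bank", "deposit", "interest"])
print(len(two_words), len(three_words))
```

With two words the sentence is still ambiguous between a river reading and a money reading; adding a third word leaves a single consistent assignment. Each word is one unknown, and the sentence as a whole is what fixes their values.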

It is the linguistic computation of this discovery, and not the neural science (see Part 3 'IMPLEMENTATION'), which I regard as the most controversial constituent of this solution.

Language- To understand it we need GOLEM, a memory model with conceptually separate motor and sensor channels

The reverse engineering of the mind's linguistic computational processes (ie by treating it as a 'language of thought' computer) resulted in the GOLEM pattern, a formalised version of the Marr-Chomsky three-level duplex fractal hierarchy. Language's ultimate purpose is to allow one person, the speaker/writer, to temporarily place another person, the listener/reader, in 'their (the speaker/writer's) shoes', if you will. Episodic writing of data to the semantic memories (data hierarchies) occurs declaratively, each time we understand novel meanings in the speech we hear or read. Human language can be understood as a sort of computer code, but cybernetically oriented. Each sentence, as its syntax is being constructed in echoic memory, describes the possibility set of logical changes to the subject::predicate links maintained in the semantic store, normally in the right cerebral hemisphere. Note that GOLEM's computational behaviour is sometimes unpredictable, therefore its execution model is partly non-deterministic[63].

For each familiar subject (people and some things) we construct an internal model, recursively maintaining its predicate lists (dependent sub-trees). All of this occurs at too great a speed to permit real-time, on-line, systematic modification of synaptic conductances. In humans, the ability to understand language as a 'dependency' grammar, permits us to store the hybrid, complex configuration of our social and physical surroundings in (mostly) the right cerebral hemisphere, something Tulving calls 'semantic' memory, and others refer to more abstractly as 'state'. This process of updating our knowledge of the STATE of our environment occurs automatically, as we understand the language we hear or read. Indeed, that is what we mean when we say we 'understand' a word, sentence or paragraph. However, because our minds are embodied biocomputers, we cannot fully comprehend the meaning of (inter alia) these changes in global state, because although they represent declarative data (explicit knowledge), most of the changes they imply must be implemented at the lower down levels of implicit memory. We must convert them into implicit format by means of sleep, a process which is, formally speaking, very compilation-like indeed!

Inevitably, when one discusses language and computation in the same breath, one must involve linguist and political activist, Professor Noam Chomsky. This website criticises Chomsky, but the reader should understand that this author owes an enormous debt to Chomsky for much of the ground upon which he stands, and from which he may, somewhat self-consciously but not without good reason, throw the odd handful of dirt in the great man's general direction. TDE/GOLEM theory is avowedly semantic in flavor, a fact which clashes with Chomsky's syntax-based approach to understanding language. I think Chomsky's so-called Minimalist Program is misguided, but my approach to biocomputation couldn't exist without Chomsky's hierarchy of programming language levels! Am I a critic or a fan? Honestly, a bit of both. As with Skinner before him, Chomsky's contribution to this field is so enormous that one must specify in great detail just which part of his theory one regards as moot.

Subjectivity- A Cybernetic approach to phenomenology

As important as linguistics is to TDE theory, just as great an emphasis must be placed upon the ABSOLUTE NECESSITY of adopting subjectivity as a sine qua non in all subsequent theorising about Strong AI and its related sub-disciplines. The fundamentals of subjectivity were stated explicitly by Uexkull in the 1930s, from general ideas first published by Kant more than a century before. They were rediscovered in the 1970s by Bill Powers, who condensed them into an easily remembered and applied formula called Perceptual Control Theory (PCT). They were then re-rediscovered from first principles by M.C. Dyer (ie yours truly) in the early 2000s. While Uexkull got the idea from Kant, I derived it by thinking about Common-coding and the ideomotor principle, ideas first raised by James, Wundt and others in the 19th Century. This stuff has been out there for a long time, just waiting for someone curious enough to search the archives. In particular, Herbart's solution [68] is not significantly different from the TDE, but is 180 years old!

Until now, the very idea of phenomenology has been a fraught one, highly problematic at best. What science has needed is some methodology by which the VIRTUAL domain (ie entities and concepts in phenomenological space) can be regarded in a similar way to the PHYSICAL domain. A crucial part of the research was the invention and credible conceptualisation of precise, non-wishy-washy definitions of both volition and consciousness, forming the paired orthogonal components which comprise the key dimensions of subjective human experience. I believe this goal has been achieved by my technique of conceptualising subjective space by decomposing it into a pair of orthogonal dimensions thus: (volition X consciousness). Cybernetically speaking, the volition component is a feedforward (open loop, strategic) one, while the consciousness component is a feedback (closed loop, tactical, reactive) one. While many cognitive theorists are happy to consider consciousness as a key part of phenomenology, they are by no means as comfortable with volition (conation). The reasons for this stem from the collective difficulty that science has with the incorporation of teleology (goals) into current philosophical frameworks. [77] gives an excellent introduction and overview of what is a very complex problem and consequently has become a deep-rooted bias in current scientific opinion. Without a clear vision of the key role that goal-orientation in general, and volition in particular, plays in phenomenology (a.k.a. subjective cognition, qualia, psychophysics), one is simply unable to construct straightforward theories about a wide range of sub-topics, ranging from interpretation of psychophysics data (eg Libet's Paradox) to constructing sensible, non-circular theories of organismic cause and effect (eg efference copy).

Until this step is UNIVERSALLY accepted as a valid one, the virtual sciences (ie phenomenology, subjectivity, etc) will always seem less 'real', less legitimate, than physical sciences. In fact, quite the opposite is true - all the (supposedly 'hard') physical sciences exist in a layer above, and are therefore entirely dependent upon, the existence of a lower (supposedly 'soft') substrate containing virtual quantities and entities: typically, multiple human consciousnesses and their historical interactions, the things we call 'self', 'society' and 'civilization'. This cannot be disputed, because it is a restatement of the obvious, hiding in plain sight. In other words, before big-S 'science' (the human institution of rule-based, data-driven examination of our shared reality) there must first have been the shared habit of introspective observation, the writing down of all the most intelligent of our thoughts. This tradition in turn could not exist without the evolution of human-type intelligence! This historical/biological reality forms the basis of Ada Lovelace's original objection to the idea of intelligent machines. Restated, this is the claim that no matter how intelligent each machine exemplar is, collectively they are the product of a pre-existent entity, namely human civilisation and technology.

The Complete Vision - an Emotional Computer with peer agency

Emotions are what 'drives' us, literally. They decide what we attend to, what motivates us, and how we respond to complex, hybrid combinations of internal and external challenges. Emotions are the hands of the self, operating the steering wheel of the mind. The left hand symbolises the feedforward aspects of motivation, and prediction, while the right hand symbolises feedback aspects of reaction, and appraisal. As such, emotions can undoubtedly be considered to be the 'jewel in the crown' of human cognition.

The precise formulation of emotion that emerges from the two-factor (C x V) parameterization is not merely a desideratum, but a logical consequence of the TDE's fractal, tri-level architectonics. This formulation, though mathematically simple in the extreme (two orthogonal binary axes is as basic as Cartesian models get), nonetheless makes sense of a most challenging topic, one that is right at the cutting edge of current research, namely subconscious emotionality. TDE theory represents the first really scientific investigation of Freud's original vision of the 'subconscious mind'. When the fractal nature of the TDE architecture is examined, it can be seen that each of the 3 orders of the tri-level TDE fractal has associated with it a command-control differential which we interact with subjectively as first-person experience: 'embodiment', 'conation' or (conventionally) 'emotion', depending on which of the three TDE levels is under consideration. Cybernetics, which is usually defined as 'the science of systems control and control systems', can equally well be described as the analysis and synthesis of systems based on their central use of conative (ie drive state) gradients or differentials. Clearly, this discovery could not have been made without the cybernetic perspective taking a primary role. Indeed, if Computation had been properly included within the Cybernetics domain from the very beginning of its inclusion in so-called Cognitive Psychology, the field would never have lost its way to such a catastrophic extent, for such a long time, and my discovery would already be an integral and indispensable part of everyone's mobile phone.

Part 1 - Solution

1.1 Research Aims and Context.

1.2 Autonomic Principles in AI; TDE is a cybernetic autonomaton

1.3 TDE and Endel Tulving's knowledge map; TDE-R = 1 x TDE1 + 4 x TDE2 + 16 x TDE3

1.4 GOLEM is a memory-based equivalent depiction of the TDE

1.5 Neuronal Linked-Loop State Machine NOT Hyperconnected Synaptic Function Machine

1.6 Philosophy = logic + phenomenology. Celebrity philosophers have undue influence on field; introduce term 'Biological Intelligence' =BI; GOLEM adds subjectivity to Dennett's idea of narrative stream of consciousness = computer program;

1.7 TDE Electrophysiology - EEG data is syntactic, not enough for causal model; GOLEM uses neural ROM windowing to explain EEG spectra;

1.8 Learning I. computational purpose = representation and reproduction (modelling); software model = meta-mechanism;

1.9 Learning II. declarative (trace) vs procedural (delay) data represents a non-verbal test for consciousness; sleep = compilation, both convert explicit data (ramification-free format) into implicit data (efficient execution);

1.10 Frame Problem, TDE Theory of Belief Semantics. logic, truth, information, data and knowledge (= meta-information) are all based on the same underlying concept (thresholding, Expected value or T-values); leads to neural ROM as only possible candidate, same as Grossberg's 'match' memory; high-level logic vs low-level learning; K-maps and Don't Care's can identify ramification issues (frame problems)

1.11 General Approach to Cognition must include Teleology. A complete theory of language does not exist, so it had to be invented;

1.12 Identity Problem- solution to Mind-Body Problem AND solution to Mind-Body Problem Problem (sic); intelligent computation only requires two data structures- hierarchy and sequence;

1.13 Linguistic-Semantic Computation Theory I - Language = Semantic-Mnemonics, with words = Labelled Sets of Salient Stimuli. Conventional SIMD computation is insufficiently powerful to explain many of the feats of BI (biological intelligence), hence it is suggested that biocomputation itself is linguistic. If the 'computer is the language' and vice versa, then language no longer becomes the great differentiator between animals and humans. Language use then becomes a (highly useful) add-on. The limit to this process is the level of consciousness of the animal. eg bonobos can use a large lexicon, but cannot use gestures to indicate other than present tense

1.14 Linguistic-Semantic Computation Theory II - Critique of ideas relevant to GOLEM in Julian Jaynes' book about evolution of bicameral mind

1.15 TDE level 3 = solution to Chomsky's deep and surface syntactic structuralism. TDE-R and GOLEM represent two complementary views of brain/mind/self. TDE-R developed from Tulving's CNS knowledge map.

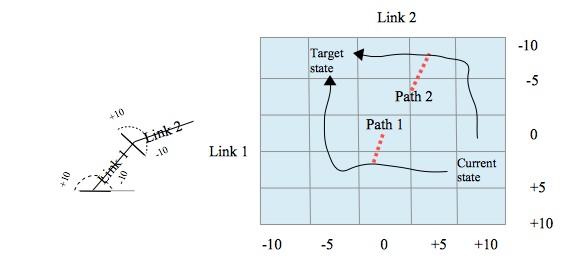

1.16 TDE as posture/reflex state/transition ROMachine. There are 16 TDE1's (16 TDE's at TDE-R level 1), which encode posture states (via synchronous Moore machines) and execute posture map traversal reflexes (via asynchronous Mealy machines)

1.17 Neuroarchitecture- Moore m/c implements voluntary, scripted aspects of computing, while a Mealy m/c implements its more automatic aspects

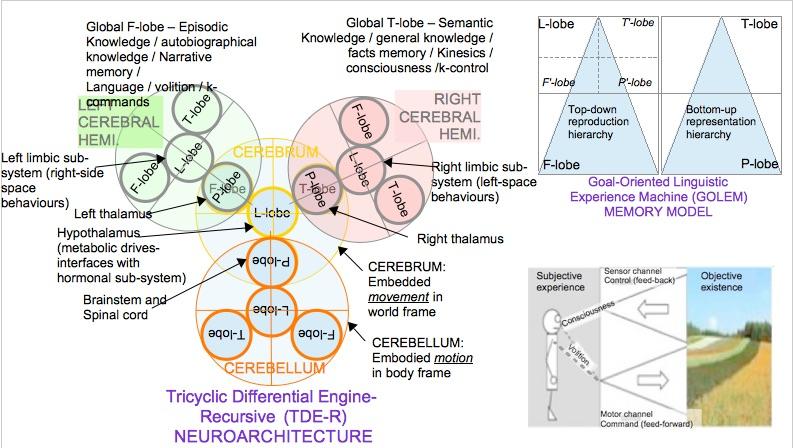

1.18 Neuroanatomy I - Effects of fractal scale on the entity type (ie TDE-R level number) modelled

1.19 Neuroanatomy II - Hierarchical divergence and convergence in GOLEM provide a non-circular way of defining semantics and syntax.

1.20 Autobiographical Case Study - Robotic design mimics Hughlings-Jackson Architecture (HJA), therefore demonstrates embodiment. The Digital Arts Motion-Control (MoCon) Rig - putting practice into theory

1.21 Posture-Reflex level Behaviorism - Semantic grounding in basic arthropod design, relation to GOLEM

1.22 TDE as fractal duplex information processor

1.23 Narrative as a cognitive structural knowledge layer

1.24 Logical Language, Cognitive Linguistics vs Linguistic Cognition

1.25 Set-based coding; Programming with Types - like Prolog, Golop is 'common coding' - circumscriptive not proscriptive, not WHAT TO DO (tasks) but DO TO WHAT (types)

1.26 advanced cybernetics topics- I. new theory of cerebellar cognition by common-coding II. that thar new-fangled 'heterodyne' sure looks like a good ol' Kalman Filter, don't it ma?

1.27

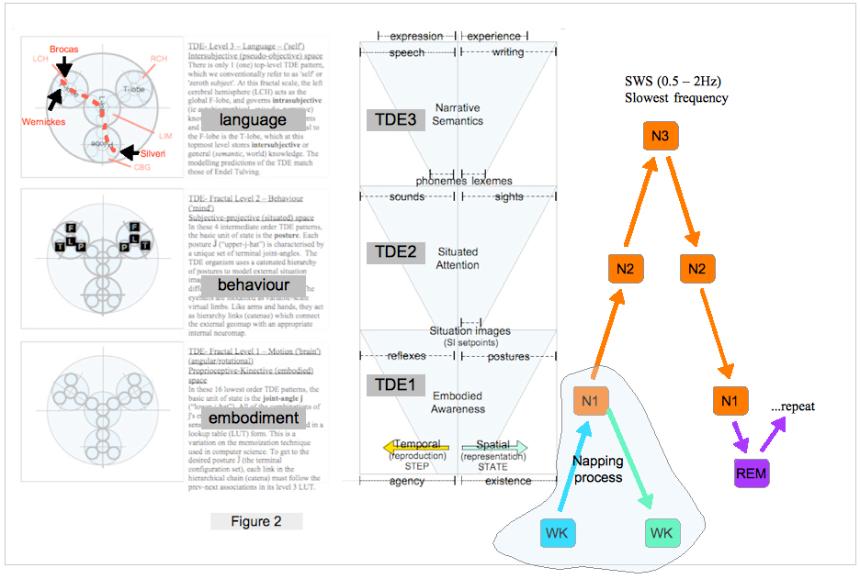

Figure 1 TDE-R fractal tri-level architecture diagram

Figure 2 TDE-R posture map formed at TDE1 level - all motion is semantically grounded to trajectories joining points on the posture maps

Figure 3 GOLEM duplex hierarchy - internal detailed functions

Figure 4 GOLEM data structures - comparison with the four lobes in the TDE computation template.

Figure 5 PEGS diagram summarises GOLEM plasticity which is based on minterm-maxterm predicate/first order logic architecture

Figure 20 equivalence between semantics and dimensionality

Figure 21 Cybernetic master (canonical) diagram - the heterodyne, the top-level emotional controller

1.1.1 The aim of GOLEM theory is to provide a satisfactory technical answer to the non-technical question- "How does the mind work?" -[Q1] The development of this theory is more-or-less 'classic' reverse engineering- given an artefact, derive its functional roots. In 'Brainstorms', Daniel Dennett states that .."what makes a neuroscientist a cognitive neuroscientist is the acceptance..of this project of (top down) reverse engineering" -note that some commentators use the term 'outside-in' for 'top-down'. The main idea, that of progressing from ends (overall function/ purpose/ teleology) to means is usually attributed to neuroscientist David Marr, but is of course as old as thought itself. Dennett also provides the reason why reverse engineering cannot be bottom-up:- such exercises are fundamentally under-determined (this author has already complained about the relative folly of www.bluebrain.org and humanconnectome.org).

1.1.2 Although the science of mind is inescapably complex, and though the terminology is not just confusing but is itself confused, GOLEM theory claims to provide the closest thing we have to a 'straightforward answer' to Q1. The major source of complexity is that there are many interactions between systems whose functions overlap, producing a matrix of meanings with multiple names and ambiguous labels that must be explained more-or-less simultaneously. This problem of 'many different names for the same darn thing' represents as great or greater a threat to finding the answer to Q1, as the philosophical issues (qualia, etc) usually put forward. This complexity presents a problem which was not initially apparent, but which has since arisen. This problem is as follows- cognitive scientists and philosophers tend to rely on words to describe concepts, with pictures in a secondary role, used to clarify or summarise a point of argument which is already in the text. However, the inherent multiplicity of the mind's operation is so great that the use of diagrams as a PRIMARY mechanism for disambiguation is unavoidable. Words are deliberately ambiguous, by design, unless great pains are taken to use them precisely. Indeed, it is precisely this aspect of language - that words describe semantic sets, not singletons - that forms part of this research.

At some point, using a diagram or graphic instead of text becomes a more efficient use of the author's energy. Semantic ambiguity is a 'device' in literature, but when encountered in the sciences, becomes a chronic problem, one which well-crafted diagrams do not suffer from. Where some kinds of generalities must be described, words suffice admirably, but in those situations where sufficient complexity at the abstract level exists, an appropriately specific graphic has been employed. In other words, diagrams are often the only way to represent concepts that are both very complex and very abstract. To employ text in that role would necessitate frequent use of 'unreadable' (eg deeply nested 'garden path') expressions[20]. For example, graph theory is literally unimaginable (impossible to discuss and use) without being able to draw pairs of vertices in space together with the edges which link them. The use of diagrams (in conjunction with text) as a central explanatory mechanism is therefore a key factor in the successful creation and evolution of the TDE/GOLEM model (see Figure 1).

1.1.3 According to Kopersky[37], there are three main barriers to fully autonomous computing. The first is that conventional computers are not truly semantic engines like brains, but merely syntactic manipulators. Kopersky reminds us that the main purpose of Searle's Chinese Room[38] (however flawed and impracticable an idea), is to remind us of this lack of semantic credibility. This basic flaw in reasoning about computation reminds us that the same mistake was made much earlier about language. Frege, Russell and most notably Carnap [58] remind us that mathematical logic is an entirely different instrument from evidence-based logical reasoning about the real world.

The second barrier is the frame problem, which is concerned with keeping track of the state of predicates that are assumed constant (sometimes falsely) during the execution of a particular computation. The Frame Problem and the Dining Philosophers problem (along with the related readers-writers problem) are really analogs of one another, because they address the ultimate issue of managing seriality in a concurrent world.

The third barrier is something that Kopersky calls the 'overseer problem'. This is the issue of goals- who (or what) provides the computational goals to a truly autonomous computer? Well, the answer must be- the computer does! But how? Ultimately, nature evolved consciousness to resolve this issue of ultimate (recursive) autonomy. More practically, many millennial researchers (who were probably born after 1980 in order to graduate after 2000) have assumed an agent-based approach as per the most common textbook[6], meaning that they adopt a subjective (robotic) stance as a default assumption. These lucky people have, in my opinion, 'dodged a bullet'. For some of us, our intellectual progress in the last century was impeded by the intransigent stance on teleology adopted by the 'mainstream' scientific establishment. The following example question might help to clarify the issue. What is the purpose of a heart? Most of us would reply reflexively, 'to pump blood'. But says who? Sure, the living heart pumps blood. But it does other things as well. Trivially (unimportantly) it occupies space in the chest cavity. But, I hear you object, that is not its main task. Again, says who? The very idea of a purpose is a matter of perspective, admittedly. There are actually a bunch of extremely unscientific folk who claim a completely different primary role for the heart, publishing in third world journals[39] to escape scrutiny and ridicule. That they are able to publish such rubbish with relative impunity is a side-effect of the quagmire that mainstream cognitive science is bogged down in, a kind of anti-testament to the dire need to reformulate the way we think about all kinds of computing machines, including brains.

The key to this conundrum is the word 'perception'. Purpose may indeed be a matter of individual viewpoint, but that doesn't place it beyond the realm of computational formulation, it merely classifies it as subjective (from a particular user/agent's perspective) rather than objective (from no particular user/agent's perspective, from a shared viewpoint). Note how the word 'objective' also triggers the accidental connotation of meaning 'unbiased', implying 'more accurate'. The solution is as simple as smallpox vaccine- avoid the problem entirely by educating technology undergrads with an agent-based AI text like Russell & Norvig. To paraphrase Adolf Hitler, Education is the virtual Archimedean lever[41], with which to effect mass change.

1.1.4 By adopting a Marrian architecture on a fractal (multiple scales of magnitude) basis, all three barriers are simultaneously overcome. The adoption of the Marr model in this discussion is almost identical to that of Fitch. The topmost layer of the Marr model, the 'computational' or 'goal-oriented' layer, explicitly addresses the third barrier, the 'overseer' problem. By explicitly creating goal-oriented agent-based variables within the enclosing scope, the appropriate control of goal values can be passed to the command of the supervising executive module. These conditions constitute the minimal requirements of an efficient token-passing knowledge hierarchy. The lowest layer of the Marr model covers the issue of semantics and symbol grounding, the first of Kopersky's barriers, and the one addressed by the proponents of Embodied Cognition (EC), typically Brooks[40]. The GOLEM/TDE is an example of 'deep' embodiment, in that all of its computation, including off-line modelling[44], is semantically grounded in body motion primitives. The lowest Marr layer contains the 'alphemes' (eg phonemes or lexemes) - the building blocks of meaning. Before the infant learns to speak, it learns to recognise those sequences of sounds which carry most information, helping it predict what happens next. Quantitatively speaking, it is undoubtedly true that the human infant has the added assistance of one or both parents providing vocal 'scaffolding' to accelerate the 'learning of meaning' process. Nevertheless, both animal and human infants are faced with qualitatively identical challenges. As to the middle, algorithmic Marrian layer, its vulnerability to frame problem issues is linked directly to timely maintenance of knowledge hierarchies. As we shall see, the human brain uses the mechanism of sleep and the posture metaphor as its principal method of automatising state-change events (ie updating its current belief framework via the semes inherent in human language).

1.1.5 The so-called 'Mind-Body problem' (MBP) is not based on scientific (rationalist, materialist, empiricist) principles, therefore it will receive limited attention in this treatment, at least in the form attributed to Descartes. The MBP is a legacy of the religious world view that almost everyone once believed in, even scientists. For example, Charles Darwin still believed that God was the ultimate lawgiver, and later recollected that at the time he was convinced of the existence of God as a first cause and therefore deserved to be called a Theist, a view which fluctuated during his later life. He admitted to being an agnostic, and would reputedly go on a walk while his family attended Anglican Sunday Service [59]. Descartes came to the conclusion that there are two substances, matter and thought, and to paraphrase him rather glibly, 'never the twain shall meet'. He thought he was being rational, in his 'stove-heated room' (his favourite place for indulging in scholarly introspection), but he underestimated the rhetorical effect of the religious teachings he received en masse during his rather traditional French aristocratic upbringing. Being told that there is a heaven and a god who loves you provides even atheists like me with a rather warm fuzzy feeling of safety, like being a child and living at home with your parents. No amount of scientific discovery can possibly hope to compete with religion on an emotional, irrational level. The triumph of religion is as much a triumph of irrationalism, of taming the natural human tendency to imagine ghosts and demons where really there exist only plain old everyday bad luck and our age-old microcosmic foes such as plague, cancer, and the bugs that poison spoiled foodstuffs. Where science shines, however, is providing satisfying emotional answers to the human yearning for deeply satisfying explanatory mechanisms, for baffling mystery to be transformed into clearly described chains of cause-and-effect.
Cartesian dualism may be vaguely comforting, but it does nothing to satisfy our modern lust for empirical truth[60].

1.1.6 However, I present a solution to the 'Mind-Body problem' problem (sic). TDE/GOLEM theory offers insights into why otherwise rational people profess a belief in irrational jujuices like the Christian soul or the Animist's 'life force' (elan vital).

1.2.1 While the GOLEM design describes both animals and humans, the TDE-R applies only to humans. That is, YOU are a linguistic biocomputer, called the TDE-R. The closely related TDE is a canonical state-space engine which has a similar role to that of the Turing Machine. The brain, mind and self are the words we use to describe the three levels of the TDE-R. By means of these three levels, the mystery of consciousness can be converted to 'mere mechanism'. In popular media (eg radio programs on 'killer' robots) the commentators often discuss the topic of autonomy, without providing a functional definition. Autonomy means that the system (defined recursively, as a whole, and as each part thereof) contains its own characteristic set of behavioural goals. These goals must operate on multiple levels, because behaviour also executes at multiple levels, recursively, fractally. In other words, one cannot speak of autonomy without also specifying the level of goals that the robot self-manages. IF the robot self-manages all of its goals, AND all its inputs (including hormonal) are defined semantically, THEN it is an autonomous agent [37]. If humans manage some of those goals for the robot, for instance if they choose the target for a 'hellfire' self-arming drone, then the autonomy is shared. GOLEM/TDE-R fractal machine theory completely specifies all of the possible levels of behavioural/experiential autonomy. This theory cannot specify those aspects of human nature[17] which are dependent on individual preferences emerging from the primate part of our humanity. However, beware of placing a priori limits on its capacity. GOLEM theory may not produce an inherently 'kind' robot, however, the robot it creates MUST have a rudimentary version of empathy, to satisfy the functional requirements of TDE-R's level 3. 
That is, if it is programmed to protect its own 'self' structure, then it will also act protectively and think sympathetically toward those beings it regards as 'other selves', that is, beings substantially identical to itself. According to GOLEM theory, while TDE level 2 functionality gives GOLEM the power to predict third party motion (physical trajectories), TDE level 3 functionality gives GOLEM the added power to predict third party mentality (motion plans, or virtual trajectories). A robot with these capabilities will act intelligently and will behave according to 'ordinary' notions of self-aware autonomy. It will know that it is an 'I', and it will also know that you are also an 'I', but any non-subjective thing is just an object, an 'it'.

1.2.2 It can perform these 'everyday miracles' because the GOLEM/TDE-R design has completely resolved the issues of internal and external notions of language, what Hauser, Fitch & Chomsky [46] call FLB (Broad linguistic functions) vs FLN (Narrow linguistic functions) - see Figure 0.1. The three levels of the TDE provide a completely satisfactory, thoroughly causal explanation of language syntax and semantics, in contrast to the Chomsky Minimalist Program (CMP), which explains (for example) word order variation by reference to linguistic functions only, eg applying the 'Move' operation in different stages of the derivation, either before 'Spell Out' or in the 'Spell Out–LF' stage. TDE/GOLEM theory (TGT) describes language in terms of common cognitive operations, that is, operations used for non-linguistic as well as linguistic functionality. Therefore TGT avoids the criticism sometimes levelled at Chomsky's approach- namely that it relies upon an argument which is basically ad hoc - its empirical 'fit' to the observed behavioural and structural features of human language is obtained by ultimately circular reference to the data it seeks to explain.

1.2.3 Where Chomsky relies on the manifest differences and latent transformations between D-structure (deep structure) and S-structure (surface structure), TGT linguistic theory works by applying some common computational principles[65]:-

(P1) - meaning/semantics is combinational, it is a function of which words are selected, not where the words are positioned.

(P2) Voluntary (controlled) computations are serial in nature, while involuntary (automatic) computations are parallel [SS77].

In the following comparison, we illustrate these rules by examining the sentence set containing the semantic elements {John, gives/receives, Mary, umbrella}. Serial structures are not inherently combinational, but permutational, because they rely on symbolic sequences for coding (obviously!). From high-school math, we know that P(n,r) = r! × C(n,r). TGT uses the following interpretation of this familiar formula- to compute all the possibilities of a combinational code, all one needs to do is execute these r! (= 3! = 6) enumerated permutations in parallel. In other words, six separate syntactic permutations, when regarded equivalently (equal narrative choice), are semantically equivalent to the {John, Mary, Umbrella} combination, or set. Note that, when examined at the 2nd (subjective behavioural) level of the TDE-R, each of the six syntactic variations adheres strictly to the familiar English constituency template Subject-Verb-Object (SVO). At the 3rd (narrative) TDE-R level, there are thus 6 semantically equivalent (ie depicting the same situation) choices the narrator/reporter can make, consisting of -

2 x active voice - John gave the umbrella to Mary and vice versa - in the active voice, the syntactical subject is the semantic agent

2 x passive voice - Mary was given the umbrella by John and vice versa - in the passive voice the syntactical subject is the semantic patient

2 x neutral voice - The umbrella was given to Mary by John and vice versa - in the neutral voice, the subject is the semantic instrument[66]
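The counting argument behind P(n,r) = r! × C(n,r) can be checked directly. The following is only a minimal sketch, assuming the three nominal elements {John, Mary, umbrella} are treated as plain labels; it enumerates the serial orderings and shows that they all collapse to one unordered set. It does not attempt to model voice or grammar, and the variable names are illustrative only.

```python
from itertools import permutations
from math import comb, factorial, perm

# Illustrative labels only; the verb is left out, as in the {John, Mary, Umbrella} set.
elements = ("John", "Mary", "umbrella")
n = r = len(elements)

# Every serial ordering (permutation) of the three semantic elements.
orderings = list(permutations(elements, r))

# P(n, r) = r! * C(n, r): with n = r = 3 there is one combination and 3! orderings.
assert perm(n, r) == factorial(r) * comb(n, r) == len(orderings)

# Treating each ordering as an unordered set collapses them to a single combination.
combinations_seen = {frozenset(o) for o in orderings}

for o in orderings:
    print(" - ".join(o))
print(len(orderings), "orderings,", len(combinations_seen), "combination")
```

Each printed ordering corresponds to one serial choice available to the narrator, while the single frozenset stands for the combinational meaning they share.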

1.2.3 Conventional linguistics is concerned almost entirely with syntax (grammar) and its relationship to semantics (meaning, contextual reference). There is another, third, element which I call symbolics. The advantage of including this extra detail is that the triad of terms now completes the formal requirements of a Marrian hierarchical recursive trilayer (see Table 1). The basic concept is easy to understand. In the most familiar linguistic domain, where words are formed into meaningful sentences, the ignored symbolics layer contains the permutational (spelling) rules that govern the ways that coarticulants (phonemes) are spoken to successfully form each word. Note that words are the symbols (coded generic meaning) that make up each sentence. The words and the sentence are both semantically valued, but the layer in between is not- it is the syntax layer. In free word order languages like formal Latin, all words belong to categorical groups, called declensions for nouns, and conjugations for verbs, according to a grammatical property called 'case'[64]. For example, the genitive case ('of' something, someone's X) conveys the meaning of belonging, ownership or governance. Having found the right case for each word in the proposed sentence, word order then becomes literally meaningless.

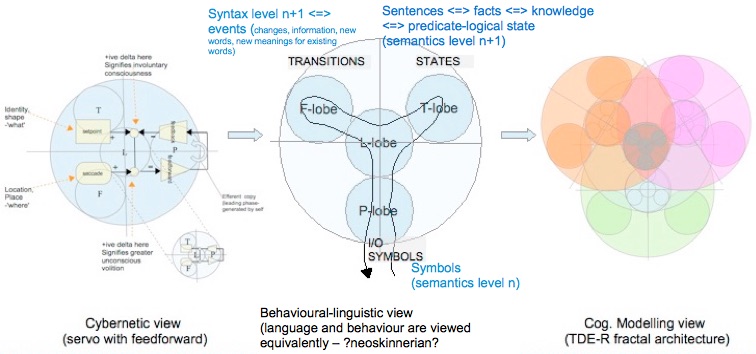

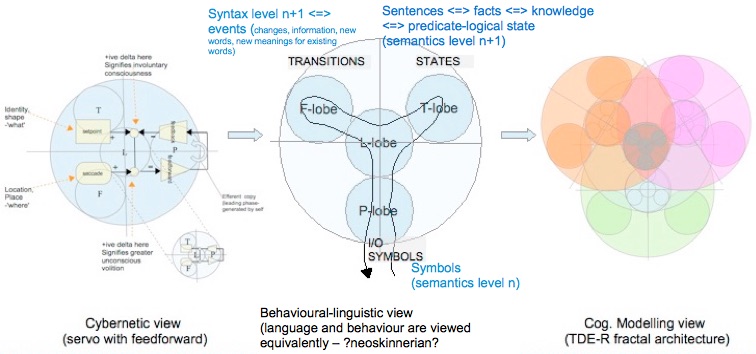

1.2.4 The GOLEM and the TDE-R are related in the same way that a Turing Machine and computer are related. The GOLEM is free of confusing morphological (i.e. anatomic) detail, so the underlying data storage mechanism (state implementation) can be clearly observed. The GOLEM has two channels, output (effector, motor, conceptual, synthetic, subject) and input (affector, sensor, perceptual, analytic, object). GOLEM uses Behaviourist associative learning mechanisms (classic conditioning, operant learning) which operate using only two abstract[22] mechanisms- (1) stimulus (a thresholded feature detector, feedback information, loop closer) and (2) response (a thresholded, ie triggered, feedforward, open loop, motion or pattern generator). These two 'primitives' or building blocks can be linked to each other, to form a reflex, the GOLEM's minimal algorithmic unit. The idea that the reflex implements is a familiar one:- IF stimulus THEN response. Sets of stimuli with very similar triggering thresholds can also be linked into 'postures', the name used for GOLEM's compound data structures. To implement controlled (voluntary, externally triggered) bodily motion, physical or virtual[20], GOLEM uses the idea of the servomechanism, a declarative, common-coded, data driven, programming methodology which views all consciously controlled movement as an animated sequence of static postures. To implement automatic (involuntary, internally triggered) bodily motion, GOLEM uses the idea of a recursively nested hierarchy of self-triggering reflexes, equivalent to the shell script. Controlled and automatic descriptors were first introduced by Schneider & Shiffrin (1977) [23], who used them to mean voluntary and involuntary.
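The stimulus, response, reflex and posture primitives described above can be sketched in a few lines. This is only an illustration under stated assumptions, not the GOLEM implementation: the class names, the 'heat' and 'pressure' features and the numeric thresholds are all hypothetical, and a posture is modelled here simply as a group of stimuli that must all fire together.

```python
from dataclasses import dataclass
from typing import Dict, List, Optional

@dataclass
class Stimulus:
    """Thresholded feature detector: fires when the sensed value reaches the threshold."""
    feature: str
    threshold: float

    def fires(self, senses: Dict[str, float]) -> bool:
        return senses.get(self.feature, 0.0) >= self.threshold

@dataclass
class Response:
    """Triggered, open-loop pattern generator (here it only returns a label)."""
    action: str

    def run(self) -> str:
        return self.action

@dataclass
class Reflex:
    """Minimal algorithmic unit: IF stimulus THEN response."""
    stimulus: Stimulus
    response: Response

    def step(self, senses: Dict[str, float]) -> Optional[str]:
        return self.response.run() if self.stimulus.fires(senses) else None

def posture_matches(posture: List[Stimulus], senses: Dict[str, float]) -> bool:
    """Assumption: a posture is matched when every stimulus in the group fires."""
    return all(s.fires(senses) for s in posture)

# Hypothetical example values.
withdraw = Reflex(Stimulus("heat", 0.7), Response("withdraw_hand"))
grip = [Stimulus("pressure", 0.5), Stimulus("heat", 0.1)]

print(withdraw.step({"heat": 0.9}))
print(withdraw.step({"heat": 0.2}))
print(posture_matches(grip, {"pressure": 0.6, "heat": 0.3}))
```

A nested hierarchy of reflexes would follow by letting one reflex's response supply the sensed values for another, which is left out here to keep the sketch short.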

1.3.1 Although the GOLEM (abstract biocomputer) and the TDE-R (its neuroanatomical[32] implementation) are described separately, the reader must try to keep in mind that we are referring to two different views of the same thing. No such identity exists when the difference between a 'normal' computer and a linguistic biocomputer is considered. Language, when considered as a data structure, has a unique property- although it is constructed of a finite number of constructional elements, it has an effectively infinite number of expressive forms. This property, called language's infinite 'productivity', derives directly from its three layer hierarchy (3LH or 'trierarchy'). There does seem to be something special about trierarchies, as can be seen in Artificial Neural Networks (ANN's) which implement adaptation using back-propagation. Two layer perceptrons cannot simulate functions (like XOR) which have disconnected regions (they are disconnected in two dimensions, but connected in three, of course). However, three layer perceptrons (3LP's) do not suffer from this fault, and can theoretically implement almost any function, which goes a long way to explaining why this kind of ANN is used in the vast majority of connectionist solutions. The three levels of the linguistic biocomputer are shown in different colours in figure 1.
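The XOR point can be demonstrated without any training. The sketch below assumes that 'two layer' means input and output units only, and 'three layer' means one hidden layer in between. It searches a coarse grid of weights for a single threshold unit that computes XOR (none exists, since XOR is not linearly separable), then shows a hand-wired hidden layer that does. The weights and grid are illustrative choices, not values from GOLEM theory.

```python
from itertools import product

def step(x: float) -> int:
    """Threshold unit: outputs 1 when the weighted sum is positive."""
    return 1 if x > 0 else 0

XOR = {(0, 0): 0, (0, 1): 1, (1, 0): 1, (1, 1): 0}

def single_unit(w1: float, w2: float, b: float, x1: int, x2: int) -> int:
    """Input layer wired straight to one output unit (no hidden layer)."""
    return step(w1 * x1 + w2 * x2 + b)

def with_hidden_layer(x1: int, x2: int) -> int:
    """One hidden layer: an OR unit and an AND unit feed the output unit."""
    h_or = step(x1 + x2 - 0.5)
    h_and = step(x1 + x2 - 1.5)
    return step(h_or - h_and - 0.5)  # OR but not AND

# Coarse grid search over weights and bias from -4.0 to 4.0 in steps of 0.5.
grid = [v / 2 for v in range(-8, 9)]
single_solutions = [
    (w1, w2, b)
    for w1, w2, b in product(grid, repeat=3)
    if all(single_unit(w1, w2, b, *x) == y for x, y in XOR.items())
]
hidden_ok = all(with_hidden_layer(*x) == y for x, y in XOR.items())

print("single-unit solutions found:", len(single_solutions))
print("hidden-layer network computes XOR:", hidden_ok)
```

The grid search is only suggestive, but the result holds for all real weights: the four XOR constraints on a single threshold unit contradict one another.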

1.3.2 The research started with Endel Tulving's [1] top-level view of knowledge sub-types, which is yet another trierarchy. First Tulving compared episodic to semantic, then declarative (=episodic + semantic) to procedural. The top layer of Tulving's trierarchy stores episodic knowledge (i.e. what is commonly called 'memories' or significant events), the middle layer stores semantic knowledge (our fact base, our mind's implementation of 'prior state') and the bottom layer implements procedural knowledge ('skills' like riding a bike, using a pencil, knowing how to coarticulate phonemes- i.e. saying words at normal speeds, in fact, doing anything physical).

The next, crucial, step was to map Tulving's scheme to CNS neuroanatomy. This step, which is more fully described in www.tde-r.webs.com , yielded the following solution- an abstract tetrafoil (four-lobed) cybernetic machine called the Tricyclic Differential Engine (TDE). There are two TDE's, the abstract machine itself, the TDE, and its multi-level anatomical implementation, called the TDE-R, where the R stands for 'recursive'. The TDE-R is a 'fractal', a self-similar (i.e. spanning several size scales) structure. In the original TDE-R, there were two levels, local and global. In the revised TDE-R, however, there are three fractal levels, let's call them TDE1, TDE2 and TDE3 [14].

1.3.3 There are 16 TDE1's, 4 TDE2's and a single TDE3. Let's take them one level at a time. Each TDE1 is a movement processor that keeps track of all the quantised angular motions of torso and limbs in one of the 16 virtual copies of the organism's multi-link body. Is this idea supported by observational data? Yes. We have seen that multiple overlapping copies of shapes similar to Wilder Penfield's cortical surface 'homunculi' have been observed on the surface of the cerebellar cortex[13]. Each of the 4 versions of TDE2 has contained within it 4 copies of TDE1, three of which represent quite different aspects of cognition, namely, Tulving's three knowledge representation levels (the fourth one was missed, or ignored, by Tulving). The interpretation placed on these internal copies of TDE1 is that they semantically ground their containing TDE2 (a knowledge base, or 'mind') in virtual movements (see [44]). Equivalently, they implement common coding/PCT principles by converting virtual movements into percepts (projections of reality). Therefore semantic grounding and common-coding (a.k.a. perceptual control theory, or PCT) are properly viewed as 'dual' (mutually inverse or complementary) sensorimotor functions.
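The 16/4/1 counts follow from a self-similar containment tree with a fan-out of four. The sketch below is a minimal illustration of that arithmetic only. It assumes, beyond what is stated above, that the single TDE3 contains the four TDE2's in the same way that each TDE2 contains four TDE1's; the class and function names are hypothetical.

```python
from dataclasses import dataclass, field
from typing import List

@dataclass
class TDE:
    """One node of the self-similar hierarchy; level is 1, 2 or 3."""
    level: int
    children: List["TDE"] = field(default_factory=list)

def build(level: int, fanout: int = 4) -> TDE:
    """Each unit above level 1 contains `fanout` units of the level below."""
    if level == 1:
        return TDE(level)
    return TDE(level, [build(level - 1, fanout) for _ in range(fanout)])

def count(node: TDE, level: int) -> int:
    """Count the units of a given level in the tree rooted at `node`."""
    own = 1 if node.level == level else 0
    return own + sum(count(child, level) for child in node.children)

tde3 = build(3)
counts = {lvl: count(tde3, lvl) for lvl in (1, 2, 3)}
print(counts)  # {1: 16, 2: 4, 3: 1}
```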

1.4 No matter what level is being discussed, whether level 1, 2 or 3, each of the TDEs is a Finite State Machine (FSM) which contains two Turing-equivalent machines, one for distance and the other for time [33]. The existence of a separate FSM for time concurs with Dennett's 'multiple drafts' idea, where time is not 'keyed' to the method of representation, but is itself represented as an independent parameter. FSM theory is often used for microelectronic circuit board design purposes, as Moore and Mealy ROMs, for example. This represents a potential problem, because in the case of GOLEM theory, it is being applied to mechanical systems such as those that exist in a clockwork mechanism. Figure 1 represents the second generation ('revised version') of TDE-R. The first generation TDE-R claimed its biggest theoretical success in that Tulving's knowledge classifications are accurately reflected in its functional projections of 'super sized' versions of frontal, parietal, temporal and limbic lobes. That is, conventionally accepted notions of the local functions of the four types of lobes (e.g. that the temporal lobe stores phasic patterns) scale up successfully to global functionality. However, the global TDE-R diagram clearly reveals a disconnect that the text description does not- a clear demonstration of the worth of a diagram over text description. This disconnect occurs between the central (global limbic) lobe and three peripheral (global frontal, temporal, and parietal) lobes. In the second generation diagram, this problem has been corrected, allowing the full promise of the diagrammatic method to be realised.

1.5 While the major part of the answer to Q1 is provided by the tripartite TDE-R architecture, it does not supply all aspects of the solution. To obtain a complete, rounded understanding of every facet of Cognition, four topic areas must be investigated and then integrated into the TDE-R presented in figure 1. The first of these areas is that of (1) neural architecture of circuits and layers under bio-computational constraints. By using the subjective framework independently described by Uexkull (1934), Powers (1977) & Dyer (2012), the duplex language machine called the GOLEM was developed. Its notable feature at the neural level is that it is constructed from Behaviourist stimulus-response units. All GOLEM behaviours are constructed from either posture sequences (slow, controlled motions- the behaviourist equivalent of the servomechanism) or reflex hierarchies.

The second of these areas is (2) neuropharmacology. For example, any model that claims to be useful must successfully predict the behavioural consequences of ingesting a dopamine agent like amphetamine. Apart from specific predictions, GOLEM theory addresses the general issue of why global neurotransmitters exist at all. Briefly, the GOLEM explanation is as follows. Consider its general coding model, which has serially processed postures at the high level, modelled by a Moore machine, and a concurrently processed reflex hierarchy, modelled by a Mealy machine. The use of pooled, or ganged, neurotransmitters enables posture-like grouped activity to be implemented over small sets of neurons at a low level. These mechanisms are built from genetic and epigenetic instructions - functionally they are no different from ROM, or 'firmware'. They cannot be learned by conventional means, though their 'programming parameters' can be modified by behavioural-cybernetic methods, as first investigated by Tinbergen[43]. Low-level (parallel) code is faster than high-level (serial) code, all other things being equal.

(3) State machine microsemantics. Earlier, mention was made of the problem of coherence of terminological semantics between sub-topics- for example, the word 'semantics' has slightly different connotations depending on whether it is used in a linguistic, logic-theoretic or formal-grammar context, etc. The reason that one single definition of the word cannot be employed is simple- to arrive at the correct single definition would imply knowing all the details of an integrating cognitive theory. GOLEM is, it is claimed, a viable candidate for that role. Consequently, the definition of semantics as used in GOLEM theory is fractal - it is the correct general view to instantiate into all the subsidiary topic areas. One definition is, not unexpectedly, more canonical than the others. This 'root' or 'foundation' definition is the one used in the TDE finite state machine, and, of course, in the Turing Machine (they are substantially the same arrangement). In these state machines, semantics is given by the state-transition level, while syntax corresponds to the patterns of I/O symbols produced during edge transition operations. Working the other way, and starting with the FSM, GOLEM theory provides a satisfyingly rigorous definition of concepts like 'number' and 'set', ones which are as nuanced as any that appear in a pure maths text.

(4) Electrophysiology (EEG) of conscious (extrinsic learning-> explicit knowledge) and unconscious (intrinsic conditioning-> implicit knowledge) cognition. Although certain pioneers have experimented directly on living brains, notably Wilder Penfield, followed by Sperry & Gazzaniga, EEG readings form the largest part of our knowledge about the living working brain. GOLEM theory places them centerstage in its explanation for the so-called mystery of sleep.
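The Moore/Mealy distinction invoked under (2) and (3) can be made concrete with two small state machines. This is only a sketch under stated assumptions: the 'sit'/'stand' postures, the 'tap' reflex and all symbol names are hypothetical, chosen to show that a Moore machine attaches its output to the state it is in, while a Mealy machine attaches its output to the edge taken for each (state, input) pair.

```python
from typing import Dict, List, Tuple

class Moore:
    """Output is attached to the current state."""
    def __init__(self, start: str,
                 transitions: Dict[Tuple[str, str], str],
                 outputs: Dict[str, str]) -> None:
        self.state, self.transitions, self.outputs = start, transitions, outputs

    def run(self, inputs: List[str]) -> List[str]:
        emitted = [self.outputs[self.state]]  # the start state already has an output
        for symbol in inputs:
            self.state = self.transitions[(self.state, symbol)]
            emitted.append(self.outputs[self.state])
        return emitted

class Mealy:
    """Output is attached to each (state, input) edge."""
    def __init__(self, start: str,
                 transitions: Dict[Tuple[str, str], Tuple[str, str]]) -> None:
        self.state, self.transitions = start, transitions

    def run(self, inputs: List[str]) -> List[str]:
        emitted = []
        for symbol in inputs:
            self.state, out = self.transitions[(self.state, symbol)]
            emitted.append(out)
        return emitted

# Hypothetical posture sequence: each state (posture) carries its own output.
postures = Moore("sit",
                 {("sit", "go"): "stand", ("stand", "go"): "sit"},
                 {"sit": "seated", "stand": "upright"})

# Hypothetical reflex: the output depends on the edge taken, not just the state.
reflex = Mealy("rest", {
    ("rest", "tap"): ("alert", "flinch"),
    ("alert", "tap"): ("alert", "ignore"),
    ("rest", "quiet"): ("rest", "none"),
    ("alert", "quiet"): ("rest", "none"),
})

moore_out = postures.run(["go", "go"])
mealy_out = reflex.run(["tap", "tap", "quiet"])
print(moore_out)  # ['seated', 'upright', 'seated']
print(mealy_out)  # ['flinch', 'ignore', 'none']
```

In the terms used under (3), the transition tables play the role of the state-transition level, and the emitted lists are the patterns of I/O symbols produced along the edges.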

------------------1.6----------------------

SOLUTION TO THE PROBLEM OF COGNITION (BIOLOGICAL INTELLIGENCE)

1.6.1 With the notable exception of a few 'celebrity' philosophers of language and cognition (eg Jerry Fodor[2]), whose recent careers are based upon controversy rather than validity, most mainstream researchers of human cognition believe the brain to be a computer, and the mind to be a set of coordinated information processes that closely resemble software. As to the nature of self, only GOLEM theory offers a plausible concrete solution. Notwithstanding the overwhelming nature of the evidence for a computational theory of mind, without a more conclusive demonstration of structural and functional verisimilitude between brains and computers, these same mainstream researchers are reluctant to take the final step and announce the problem as solved. It is the ambit claim of this research to have resolved all of the major philosophical and pragmatic objections to the realisation of strong-AI (or AGI, if you prefer.), thus opening the way forward to the implementation phase. For those readers who are being exposed to GOLEM theory for the first time, please refer to the previous websites named in the preface. If you are reading this in all seriousness, and are willing to accept its claim at face value, then you want to proceed as quickly as possible to a testable prototype. Before proceeding further, something must be said about the profligate use of neologisms in this discussion. They are regarded as required, but regrettable- a 'necessary evil'. In highly technical matters, plain speaking has as much use as open-toe sandals on the moon. Take 'BI' for example. BI has rather obvious provenance w.r.t. its 'sister' term, AI (Artificial Intelligence). The intention in using such non-standard language is to provoke the AI/CogSci community out of their institutional inertia, and complacent defeatism, into an energetic, coherent and detailed response to GOLEM's unique set of innovative features. 
Once the design has been professionally coded and debugged, it should possess functionality that is significantly superior to that of other human cognition simulators, eg CLARION (which it seems to resemble more closely) and ACT-R, SOAR etc (with which it has less in common).

1.6.2 The biggest advantage GOLEM theory possesses is that it represents a genuine solution to subjectivity (also known by a bevy of euphemisms such as psycho-physics, phenomenology, qualia etc.). That is, it simulates internal, first-person experience by possessing exactly the same data structures and algorithms as do real human minds. This is a bold claim indeed, one which can be ultimately tested only after a working prototype is made. However, sufficient technical detail is presented in this website to allow critical analysis of the design's underlying philosophy and core science in advance of its production. This research endeavours to complete the explanatory journey started by Daniel Dennett in 'Consciousness Explained'. There is a certain 'negative' narrative associated with treating human cognition as a 'mere mechanism', one which suggests, subliminally as much as openly, that human cognition may never be solved, may even be unsolvable. These comments do not come from a lunatic fringe of zealots, but from some career scientists who, blockaded by the siege engines of unreasonable doubt, have decided not just to play safe, but to lie down and play dead. Given the pathological level of doubt which exists around mainstream 'meat and potatoes' approaches to speculative (incl. theoretical) science, even within reputable journals, it is not surprising that 'alternative medicine' approaches have emerged apart from the ('mainstream') engineering-oriented one taken in this discussion.

1.7.1 One of these non-standard approaches involves focussing on the EEG signals and whether or not they represent sufficient primary evidence of cognition all on their own. Elliot Murphy, in Frontiers in Psychology (Language Sciences), wrote a comprehensive assessment of this approach in 2015 called 'The brain dynamics of linguistic computation'. Murphy's approach depends on correlation (things happening at almost the same time) rather than causation, and is therefore not deserving of the term 'explanation'. GOLEM theory itself suggests some reasons why. While observing things is the job of the bottom-up, sensor-side information channel (which generates representations which increase their degree of meaning as they ascend the perceptual hierarchy), understanding things requires the two channels to interact cooperatively all the way up GOLEM's duplex information hierarchy. To understand something properly is to restate it 'in one's own words'. This advice can be generalised to mean re-expressing the core semantics using one's own individual syntactic codes, the internal system of names one has developed for correctly labelling things and groups of things. Murphy's EEG-based analysis of mental activities, by contrast, is a purely syntactic exercise, without any way to allocate computational semantics to the patterns (and patterns of patterns) that emerge from advanced mathematical treatments of the data. Without an empirical metaphor, ie a mapping from each particular group of EEG signals to the key parts of a known computational mechanism, such as a Turing Machine or T.O.T.E., no truly explanatory account of the data (that is, one containing causal mechanisms) can be constructed. Searle's Chinese room [34] is a kind of precautionary metaphor, one which is typically used to demonstrate this very point - that syntax and semantics are independent properties. Syntax cannot replace semantics.
While intelligent systems can infer much about the nature of the world in which they find themselves, they must be equipped with a semantic foundation, a basic 'alphabet' of semantic primitives with which to construct increasingly abstract feature vectors, each one a new 'meaning'. Human infants are indeed born with a LAD (Language Acquisition Device), but it must be supplied with a set of common, grounded canonical proto-meanings. In the case of the TDE-R, these are the sequences of joint angles contained in the 16 TDE1's posture maps (posture tables).

1.7.2 In fact, EEG signals form an important part of the GOLEM model. They are 'ganged' inhibitory 'gating' signals, and act in a way not dissimilar to the scanning signal on a sweep generator (see variable labelled 'T[ganged]' in figure 11). The implication of this arrangement (called GOLEM T-logic) is that neural circuits are ROMs, or 'Read-Only Memories', also known as 'maps'. In point of fact, the idea of a map is inherently 'read-only'. The first task for the person (or ant or bee or migratory animal) who discovers new territory is to map it. This means 'writing' {position X feature} data onto a spatial framework [14]. Subsequently, those who aren't explorers (driven by curiosity and the desire to be famous), but exploiters (driven by profit motive and the desire to be powerful), use the maps in a read-only process called 'navigation'. Like the chauffeur and mechanic of a limousine, who are separate people with separate domains of concern, it makes good 'system-sense' to clearly separate mapping (learning) from navigation (behaviour). ANNs which rely on iterative adjustment of synaptic conductances for each and every solution required clearly violate this tried and true principle of system organisation. In a manner which should sound familiar to any good electronics engineer, the faster sweep (EEG) frequencies in the GOLEM implementation model gate the slower ones [16].
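
The separation of mapping (writing) from navigation (reading) described above can be illustrated with a minimal Python sketch. The function names explore and navigate, and the sample territory, are hypothetical and chosen only for illustration; the sketch shows the general 'write once, then read-only' principle and makes no claim about how GOLEM T-logic or neural circuits actually implement it.

```python
from types import MappingProxyType

def explore(observations):
    """Mapping (learning) phase: write {position: feature} pairs onto a spatial framework."""
    territory = {}
    for position, feature in observations:
        territory[position] = feature
    # Hand out a read-only view: lookups are allowed, writes raise TypeError.
    return MappingProxyType(territory)

def navigate(rom_map, route):
    """Navigation (behaviour) phase: read-only lookups along a route."""
    return [rom_map.get(position, "unmapped") for position in route]

# Hypothetical territory: grid positions paired with the feature found there.
rom_map = explore([((0, 0), "nest"), ((0, 1), "stream"), ((1, 1), "food")])
print(navigate(rom_map, [(0, 0), (0, 1), (1, 1), (2, 2)]))
# ['nest', 'stream', 'food', 'unmapped']
```

Any attempt by the navigator to alter the map, for example rom_map[(2, 2)] = "cliff", is rejected, which is the sense in which the map behaves as a ROM once exploration is over.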

1.8.1 Subhash Kak et al (2002) say: "The mainstream discussion has moved from earlier dualistic models of common belief to one based on the emergence of mind from the complexity of the parallel computer-like brain processes". This is a fair summary of what many researchers believe today, but though it may prove to be correct, it is not the 'fresh eyes' approach that I used. Rather, I looked at the issue of representation and modelling. What is a special purpose modelling device? It is one that can model (both in terms of its appearance and its function) many parts of the objectively (externally) defined codes. This is what 'hardware' is. A painting in an art museum satisfies one of these qualities (coded imitation or mimicry), but cannot portray change over time by itself. If there are multiple copies of the painting, all slightly different, then change can be represented - the paintings then form a ROM, or read-only memory. But just having 'hardware' is not enough. Imagine a modelling (representation+execution) device which is able to model other modelling devices (called 'mechanisms') using subjectively, internally defined codes - in short, a meta-mechanism! This is what adding a 'software' layer to the 'hardware' layer is.

1.8.2 Others have had similar ideas, of course. Van den Bos [12] starts with a comparison between 'delay' and 'trace' conditioning, the former being the familiar Pavlovian form, and the latter being identical except that the subjects had conscious knowledge of the temporal relationships between conditioned and unconditioned stimuli. It is widely accepted that the hippocampus is involved in declarative (trace) knowledge, but not in procedural (delay) knowledge. Van den Bos asks whether such non-verbal comparisons might not form the basis of exploring consciousness in animals. After all, higher animals which lack language nevertheless seem to possess hippocampus-like brain structures. Van den Bos has thus reduced a consciousness test to an anatomical check, one that does not rely solely on verbal report, a condition non-human animals will obviously fail to meet. He shows he is aware of the work of both Powers (1973) and Uexkull (1934), to which this current study also makes significant attribution. Briefly, Van den Bos divides both mental and neural states into invariant (shape) and variant (contents) parts. This is precisely the division that GOLEM theory proposes, although it uses the terms 'representation' and 'reproduction' (otherwise known as program or code 'execution' in computer science literature). GOLEM theory also clearly distinguishes 'hardware' level meta-mechanism from 'software' level meta-mechanism. Van den Bos does not make clear, however, the equivalence of 'modelling' and 'meta-mechanism', a factor which greatly assists deeper understanding of the mind-body issue. Both accounts ultimately arrive at similar conclusions - all mechanisms consist of a static, structural component (ie a framework), and a dynamic, procedural one (ie a set of moving parts). These mechanisms can be physical, eg a clockwork watch, composed of the chassis and the escapement, or virtual, eg forces in a bridge, which are divided into 'dead'/static/self-weight and 'live'/dynamic/traffic loads.

Note that meta-mechanisms must be connected to the 'outside world' (defined recursively). This implies at the very least the existence of two sub-mechanisms: (a) observation and (b) action. We usually call sub-mechanism (a) 'learning', but in its minimalist form, it supplies the 'feedback' which turns mere blind, open loop ('feedforward') action into guided, closed loop ('feedback') action, or experimentation. In Behaviourism, observational association is called 'classical conditioning' (CC), while adding feedback to CC yields the experimentation mode called 'operant learning'. Whatever the wider implications of Behaviourism, it shares these two associationist (automatic) learning modes with GOLEM theory. That is, GOLEM theory (which purports to be a 'real' model) supports these aspects of Behaviourism as also being 'real'.
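
The difference between blind, open loop action and guided, closed loop action can be made concrete with a small Python sketch. It is a generic control-systems illustration, not a GOLEM component: the function names, the proportional correction rule, and the numbers (target, gain, drift) are all assumptions chosen for the example.

```python
def open_loop(start, command, steps, disturbance=0.0):
    """Feedforward only: repeat a pre-planned command without observing the result."""
    position = start
    for _ in range(steps):
        position += command + disturbance
    return position

def closed_loop(start, target, gain, steps, disturbance=0.0):
    """Feedback: observe the remaining error at each step and correct in proportion to it."""
    position = start
    for _ in range(steps):
        error = target - position               # (a) observation
        position += gain * error + disturbance  # (b) action
    return position

# Assumed scenario: reach 10.0 in 20 steps while an unmodelled drift pushes 0.1 per step.
target, steps, drift = 10.0, 20, 0.1
blind = open_loop(0.0, target / steps, steps, drift)
guided = closed_loop(0.0, target, 0.5, steps, drift)
print(f"open loop misses by {abs(blind - target):.2f}")
print(f"closed loop misses by {abs(guided - target):.2f}")
```

Under these assumptions the open loop version accumulates the whole drift (a miss of about 2.0), while the closed loop version holds the miss to about 0.2. Simple proportional feedback reduces the error without eliminating it, which is enough to show why observation must be coupled to action.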

1.8.3 Any machine capable of exhibiting intelligence is composed of four crucial components. Firstly, there are many memory places with which to simulate meta-representations - eg create appearances via pictorial codes. Secondly, there is a CPU with which to execute function (modify those meta-representations over time). Thirdly, there are methods to implement reciprocal cause and effect, that is, interact with the 'outside world'. GOLEM theory uses the standard behaviourist 'atomic' concepts of stimuli (environmental effect events) and responses (environmental causal actions at the 'existence' level). Finally, the idea of hierarchical memory codes must exist, with which to encode syntactic (motor-side) and semantic (sensor-side) data and knowledge, and permit abstract recombination of the existential (stimuli and responses) into the experiential (object and subject). These four factors are necessary for intelligence, but they do not by themselves yield consciousness, which requires a third layer of meta-meta-mechanism. All three layers appear in figure 1.

Comparison between GOLEM and conventional computers

1.9.1 The computational solution described in this website is called the TDE/GOLEM Theory (TGT). TGT is a combination of features shared with common-or-garden computers and features that don't appear in any current computer design. The most outstanding example of the former type is compilation, that is, converting high-level (scripted) descriptions of novel functionality into low-level (executed) code. Another example of the first type is the self-similar (ie fractal) nature of the GOLEM computational architecture, which is produced by the recursive application of the TDE pattern - see Figure 1. This architecture is nothing if not a hierarchical file system, exactly like the one used by every modern OS (eg Windows, Unix, Mac OS X).
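
The way in which recursive application of a single pattern yields a self-similar hierarchy resembling a file system can be sketched in a few lines of Python. The Node class, the build function and the branching factor are hypothetical; the internal structure of the TDE pattern itself is not modelled here, only the recursion.

```python
from dataclasses import dataclass, field

@dataclass
class Node:
    """One instance of the repeated pattern; its children are instances of the same pattern."""
    name: str
    children: list = field(default_factory=list)

def build(name, depth, branching):
    """Apply the same pattern recursively until the requested depth is reached."""
    node = Node(name)
    if depth > 0:
        node.children = [build(f"{name}/{i}", depth - 1, branching)
                         for i in range(branching)]
    return node

def paths(node):
    """List every node by its path from the root, as a file system would."""
    yield node.name
    for child in node.children:
        yield from paths(child)

tree = build("root", depth=2, branching=2)
print(list(paths(tree)))
# ['root', 'root/0', 'root/0/0', 'root/0/1', 'root/1', 'root/1/0', 'root/1/1']
```

Every subtree has the same shape as the whole, which is the property the word 'fractal' refers to in the paragraph above.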

Sleep permits use of hybrid (predicate/first order + propositional/zeroth order) logic

1.9.2 GOLEM theory suggests that sleep (in brains) and compilation (in computers) are both manifestations of a common underlying functional purpose - the implementation of hybrid (predicate and propositional) logic. GOLEM's use of a technically conventional compilation process illuminates the logical conversion mechanism that underpins all compilation. In other words, TGT suggests that compilation performs the same essential function for both computer systems and human cognition. Compilation allows adaptation to be as free as possible from frame-problem issues, by permitting 'high level' (ie globally consistent) logic to be used to describe the changes in the subjective operating environment that are input to the adaptation process. Compilation in the most general form is a conversion from the predicate (first and higher order) logic of narrative thought/language into the propositional (zeroth order) logic of procedurally memorized behaviour/action. Explicit memory is exchanged for implicit memory. In brains, sleep performs a similar function by converting high level declarative events (episodic changes in semantic knowledge held in the cerebral hemispheres) into low level procedural knowledge (conditioned variations in reflexes and postures). Sleep permits the organism to combine the resistance to the frame ('ramification') problem conferred by high-level predicate logic with the parallel-processing efficiency of low-level propositional logic.
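
The conversion from predicate to propositional logic can be illustrated with the standard technique of 'grounding' a rule over a finite domain. The Python sketch below is offered only as an analogy under stated assumptions: the domain (OBJECTS, DISTANCES), the predicates and the responses are invented for the example, and nothing here is claimed to be the mechanism that sleep or GOLEM actually uses.

```python
from itertools import product

# Hypothetical finite domain of constants.
OBJECTS = ("berry", "wasp")
DISTANCES = ("near", "far")

def is_edible(obj):
    return obj == "berry"

def is_near(distance):
    return distance == "near"

def rule(obj, distance):
    """Predicate-level rule with variables: for every obj and distance,
    near and edible -> grasp, near and not edible -> withdraw, otherwise ignore."""
    if is_near(distance):
        return "grasp" if is_edible(obj) else "withdraw"
    return "ignore"

def compile_rule(rule, *domains):
    """Ground the rule: evaluate it once for every combination of constants,
    leaving a variable-free (propositional) stimulus -> response table."""
    return {args: rule(*args) for args in product(*domains)}

table = compile_rule(rule, OBJECTS, DISTANCES)
print(table[("wasp", "near")])   # withdraw
print(table[("berry", "far")])   # ignore
```

After 'compilation' no inference is needed at run time: each response is a single table lookup, and the entries are independent of one another, so they could in principle be consulted in parallel. The price is that the table must be rebuilt whenever the high-level rule changes, which mirrors the trade-off between explicit and implicit memory described above.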

Natural logic emerges from binary codes in knowledge hierarchies