Part 2 - Credibility

2.1 Best Strategy? - Generalist Specialists vs Specialist Generalists

2.2 How do I know I am right? Core Principles

2.3 How do YOU know I am right? Hirstein's Top Ten

2.4 CREDIBILITY = VERACITY (external truth) + VALIDITY (internal consistency)

2.5 SEMANTIC COMPUTING 1 - John Searle's Chinese Room

2.6 SEMANTIC COMPUTING 2 - Thomas Nagel's What is it like to be a bat

2.7 SEMANTIC COMPUTING 3 - Chomsky's Minimalist Program

2.8 Jerry Fodor's mid-career volte-face

2.9 under construction

2.10 under construction

2.11 under construction

2.12 Thomas Nagel's What is it like to be a bat

2.13 Chomsky's Minimalist Program

2.14 under construction

2.15 Baars' GWT

2.16 Rod Brooks' embodied cognition

2.17 George Lakoff's embodied/situated cognition

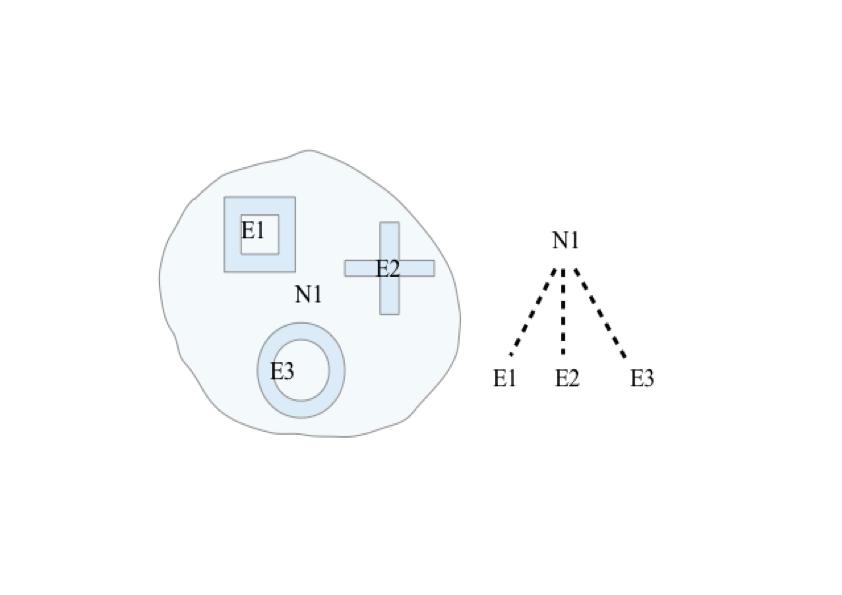

Figure 6 Every predicate logic variable, say N1, actually consists of a set of instance elements E1, E2, E3... This describes an internal viewpoint, similar to the function of Ken Pike's 'emic' classifier (eg phonemic/phonemes vs phonetic/phones)

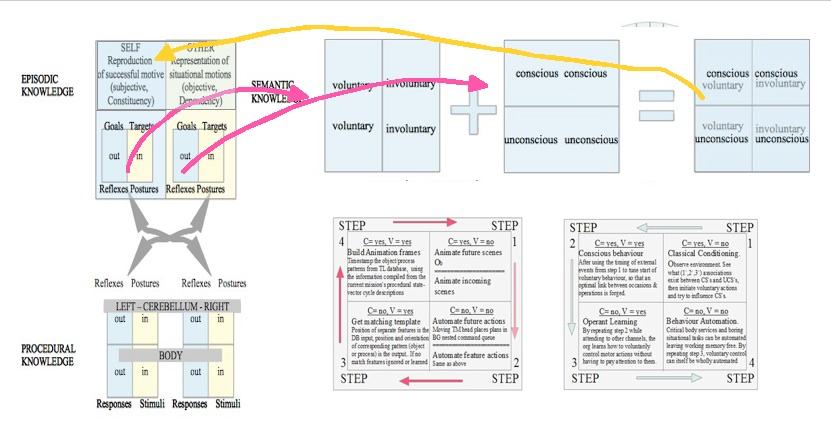

Figure 7 Lateralisation of the body plan is the 'great evolutionary step forward', forming the voluntary and involuntary aspects of behaviour

INTRODUCTION TO PART 2

In Part 1 ('SOLUTION'), I presented my discovery, the TDE pattern, with little detailed justification. It is inevitable that you will have serious doubts and reservations about what amounts to an ambit claim. It is therefore the task of this section, the second of three parts, to allay some of those doubts by providing a more detailed argument, in which I tackle model building in the general case as well as specific cognitive science issues. I discuss what factors constitute credibility, and how I have explicitly addressed each of them. Finally, I list the principles upon which I rely in clear and unambiguous form. As well as the indispensable Occam's Razor, I add something I call 'Holmes Shroud', my new name for ancient wisdom. The first part of section 2 is of a general nature. The second part consists of specific criticisms targeted at other researchers, and the errors I believe they have made.

There is an 'elephant in the room' with us. That pachyderm is an inconvenient fact, the reality of my having solved a problem that had not only not yet been solved by my supposed betters, but was (and still is) widely regarded as being almost beyond solving, at least in the conventional sense. The 'conventional' or 'classic' solution paradigm for any given scientific problem is exemplified by Einstein's work, first on Special, then General Relativity [17]. The general identity problem (ie the mind-body problem with consciousness included) bears the same relationship to its special counterpart as Einstein's General theory does to his Special theory. In a rather abstract way, the analysis of consciousness demands a similar kind of curved four-dimensional 'cognometry'.

After some thought, I believe one clear advantage I not only possessed, but deliberately and explicitly developed, was my being a Specialist Generalist, and not, as so many CogSci/AI folk seem to be, a Generalist Specialist (I hope this doesn't sound too confused - I know what I mean!). The reader is invited to speculate on why philosophers and philosophy originally came into being, way back in the medieval mists of time, when universities and monasteries were one and the same thing. Perched atop the occidental academic specialist knowledge pyramid, philosophers were required to observe, and then make judgements upon, the insurmountable issues that dog the more pragmatic and worldly subcategories. However, over time, philosophers have morphed into philosopher-kings and other even less functional hybrid incarnations, so that, when the time came to apply their collective wisdom to the mind, they were utterly ineffective. Meanwhile, another breed of person arose, the techno-savvy individual who is as pathologically curious as they are financially independent. A typical member of this group is the Engineer-philosopher, who is first and foremost a generalist who knows as much about, for example, the field of abstract computation as the history of industrial espionage. The 'normal' academic is focussed firstly on regular promotion and salary packaging, an understandable concern for one who must shimmy up the slippery, snake-infested corporate ladder. Post-doctoral research, and even (shock! horror!) actual discoveries, come in as a distant second, third, or lower ranking in their list of life priorities. Even if everything goes well, and they somehow make room in their busy mid-career, married-with-children, university lecturer life for a genuine attempt to make new discoveries, the conclusions they arrive at are necessarily truncated and their models and theories insufficiently thought through and developed.
The reason for the left-handedness of language is a case in point. The best that current CogSci can come up with is as follows: since evolution seems to favour functionally specialised brain 'modules', it has done so with language, locating it in the left perisylvian area as much by random choice as by any patently logical imperative. That this explanation is inadequate at best is shown by asking why the human population doesn't contain roughly equinumerous left-located-language and right-located-language cohorts. Compare this fall-short attempt with the success of the TDE at explaining with almost complete satisfaction why both Broca's and Wernicke's streams are overwhelmingly left-located. The TDE was a product of Specialist-Generalist thinking, ie cultivating and refining specialist mastery of a generalist theory of all governable (ie goal-directed, purpose-oriented) systems, as opposed to the incremental, often incidental general increase in overall specialist understanding that occurs in all academics as they progress through their career milestones. This then is the (rather poorly explained) reason why I succeeded where everyone else failed.

AI in its many guises represents business as usual, industrially and historically speaking. Inventing AI is not so much like the invention of the computer; rather, it is much more akin to the invention of atomic energy. It is 19th Century maths that unleashed the power of the atom in the 20th Century, and it is 20th Century computation which will unleash the power of the mind in this, the 21st Century. This process has already begun, of course, with the weaponisation of drones, and the use of mini AIs in each phone (eg Siri, Cortana). We should not be so surprised to find that the best intuitive answer to the question, 'how can a non-living machine think?' is 'it's the software, stupid!'. Since 2008, I have been researching the topic of so-called strong-AI on a full-time basis, using my qualifications and skills as an engineer and scientist to the full. Over the next few years, I found a 'conventional' solution to the problem that was computational[1]. Once I felt I had addressed the objective AI checklist items (on Gamez's scale of machine consciousness, grades MC1, MC2, and MC3), I then tackled the real thing, constructing a design for synthetic peer consciousnesses (Gamez MC4). In 2017, I added the final specification for the phenomenological 'kernel'. This is the last step in the design, and marks the start of prototyping.

2.1.1 Let's go directly to the most controversial part of my research, and look at some fundamental philosophy, to wit, the analysis of Consciousness and Intentionality[14]. This Cambridge University publication is a comparatively brief (50 pages) but thorough overview of current opinion in the field. However, as cogent and thorough as this is as a scholarly analysis, there is zero (creative, individual) synthesis contained within its content. This is not surprising, since Philosophers are usually not also Engineers. Creativity, invention and discovery are the very essence of great cognitive science (for example, Professor Bernard Baars doing individual research and inventing the Global Workspace as a model of (access) consciousness[16]). The core innovation of my research is the suggestion that the introspective (phenomenological) space we all possess 'inside our heads' (sic) has two orthogonal components, consciousness (awareness, reflexivity, intentionality) and volition (conation, conatus), and that these essentially Cartesian ('independent', mutually normal) constituents are derived naturally from the only two possible modes of information transfer, ie input and output. The way that I have constructed the phenomenological space is to strip everything back to basic mechanistic analogies, constructed in front of the reader's very eyes, if you will. For example, stand in front of a mirror, and look directly into your own eyes. It is best to choose one or the other, but looking in the middle will work for some. Now actively 'flick' your eyeballs left and right, using your voluntary control of the eyeball muscles. What do you see your eyeballs do? They do not appear to move at all! This is proof that what you are conscious of and what you can voluntarily control are two separate things. It is this experiment, as well as Libet's Paradox, that led to the proposal that subjective space consists of two constituents.

2.1.2 Computer memory is, in its simplest form, a bunch of n-ary switches, where n is almost always 2. In my formulation of robotic systems, I use n-position rotary switches, which are in essence rotating levers with sensors 'thresholded' to fire at certain setpoint angles theta1, theta2, etc. The concept of thresholding is not peripheral at all. Far from it! I use thresholded sensors as a physical implementation of a neobehaviourist 'stimulus'. Some readers will immediately realise that the idea of a thresholded sensor, or stimulus detector, describes almost every type of idealised neuron (called, generically, neurodes, to distinguish them from real neurons). To me, this seemed kind of obvious, but I could not find this direct comparison anywhere in the literature. Warren McCulloch is one of the few exceptions to this rule that I know of. The reader is encouraged specifically to read his famous paper, and more generally to look at papers written decades ago, when the problems were clearer and more tractable, and the number of red herrings to be discounted was enumerable.
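The rotary switch described above can be sketched in a few lines of Python. This is a minimal illustration only: the class names `ThresholdSensor` and `RotarySwitch`, the setpoint angles of 30, 60 and 90 degrees, and the rule that a sensor fires at or above its setpoint are all assumptions made for the example, not part of the original design.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ThresholdSensor:
    """Hypothetical 'stimulus' detector: fires once the lever reaches its setpoint."""
    setpoint_deg: float

    def fires(self, angle_deg: float) -> bool:
        # Assumed rule: the sensor is on at or beyond its setpoint angle.
        return angle_deg >= self.setpoint_deg


class RotarySwitch:
    """An n-position switch built from a lever and (n - 1) thresholded sensors."""

    def __init__(self, setpoints_deg):
        self.sensors = [ThresholdSensor(s) for s in sorted(setpoints_deg)]

    def position(self, angle_deg: float) -> int:
        # The switch position is the number of thresholds the lever has passed.
        return sum(sensor.fires(angle_deg) for sensor in self.sensors)


# Three illustrative setpoints (theta1, theta2, theta3) give a 4-position switch.
switch = RotarySwitch([30.0, 60.0, 90.0])
positions = [switch.position(a) for a in (0.0, 30.0, 45.0, 95.0)]
print(positions)
```

With n = 2 (a single setpoint) the same class reduces to the ordinary binary switch of computer memory.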

2.1.2 The task I faced was to think of how to use complex arrangements of simple n-ary switches to model/control the real world, or some simulacrum thereof. Before we continue, non-engineer readers are reminded that modelling and controlling are the off-line and on-line forms of the same basic mathematical activity. This fact is already well known to experienced engineers. To engineers (applied physicists) and other flavours of applied mathematicians, the philosophical wars of the last 200 years, not to mention the digital computer revolution of the last 50 years, might never have happened - their minds have always treated the world (including people's bodies and minds) as inherently computable. All computers did was make it easier to do big fun stuff, faster and faster. OK, let's return to large banks of these basic switches (called 'memory') and the control logic devices (microprocessors) that make one or more of them switch their current values when we want them to. THAT'S THE HARDWARE.

2.1.3 The hardware building block is the switch (or lever), which is a pluripotent unit. Think of the variable or function f(m,t). This means it has several (pluri-) meaningful/powerful STATES (memory as switching levels) or POSITIONS (memory as lever angles). The software can now be described as the set of current, or proposed, or historical positions of the switches or levers inside the hardware. The software building block is UNIPOTENT: think of the specific value f(m,t) = x1, or if you prefer set theory to functions, think of software as tracking which element in a set has been chosen w.r.t. time. That is why the hardware just sits there, looking, well, 'hard', while the software dances and cavorts and chatters over screens and printers, echoing the patterns stored or 'cached' in 'scratch' or temporary memory buffers. In neobehaviourist terms, we must introduce another concept, the response, to adequately model equivalences between software and biological processes. While stimuli are thresholded, that is, their default state is 'off', the default state of responses is 'on'. If a response unit is connected into a quasi-biological circuit, it will continue to fire with a frequency whose value depends on the individual response. In cybernetic terms, stimuli are used to model unitised 'feedback', or control information, while responses are used to model unitised 'feedforward', or command data. Again, I looked everywhere for these essential equivalence relations between neobehaviourism and cybernetics, but I could not find them. I had to work them out for myself, just as I did for subjectivity. Later I found that my model of subjectivity contained concepts that matched the Umwelt, Umgebung, and Innenwelt, discovered by the Estonian biosemiotician, Jakob von Uexküll. My labors were not wasted.
I cannot imagine anyone developing a viable theory of biological intelligence (BI) without constructing sets of functional equivalences between the overlapping worlds of psychology, engineering, linguistics and philosophy. This was the most time-consuming task in my research project, yet the most necessary, for from these efforts emerged the multi-disciplinary tools I needed to integrate my findings.
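The stimulus/response distinction drawn in 2.1.3 can be made concrete with a small Python sketch. It is offered as an illustration under stated assumptions, not as the author's implementation: the function names, the numeric threshold, and in particular the `inhibited` flag (a hypothetical way of switching a default-on unit off) do not appear in the original text.

```python
def stimulus(signal: float, threshold: float) -> bool:
    """Feedback unit: 'off' by default, fires only when the signal reaches threshold."""
    return signal >= threshold


def response(rate_hz: float, duration_s: float, inhibited: bool = False) -> int:
    """Feedforward unit: 'on' by default, firing at its own characteristic rate.

    Returns the number of firings in the given interval. The 'inhibited'
    flag is a hypothetical mechanism added for this sketch.
    """
    if inhibited:
        return 0
    return int(rate_hz * duration_s)


# A stimulus stays silent until its threshold is crossed...
quiet = stimulus(signal=0.2, threshold=0.5)
active = stimulus(signal=0.7, threshold=0.5)

# ...whereas a response fires without any input at all.
spikes = response(rate_hz=5.0, duration_s=2.0)
print(quiet, active, spikes)
```

The asymmetry is the point of the sketch: the stimulus needs an input to do anything, while the response needs an intervention to stop.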

2.1.4 If we describe hardware and software in the kinds of circular definitions beloved by 'druids' of all kinds, folk who really don't want anyone to understand their trade secrets, then no one will ever believe that a computer can successfully mimic biology at all. However, if we describe computers using 'by inspection' truths, which rely on transparent, mechanistic, non-jargonese arguments such as those I have just used to describe HARDWARE and SOFTWARE, it becomes much easier to imagine both brain and mind falling within these descriptive, yet rigorous categories. I needn't even have used the switching analogy. Another equivalent analogy is that the hardware is the gears and escapement of a clockwork timepiece, while the software is the hands moving across the clockface. HARDWARE CONTAINS/RECORDS CHANGE: it encapsulates it as a repeating pattern, it is a physical embodiment of a constituent function or set, like computer memories. However, SOFTWARE RELEASES/EXPRESSES CHANGE: it articulates it, like computer displays.

How do I know that I am right?

2.2 Explanation of the highly detailed kind that appears in this discussion always involves reduction of some sort. The researcher claims that, by discovering principle P in system C, unknown complex system behavior C(P) is rendered no more mysterious than known simpler system operation S(P). In this case, C is the human cognitive system (self, mind and brain), S is the digital computer and P is the property of 'meta-mechanism', or 'software'. In prosecuting the reductionist agenda, I have followed reverse-engineering best practice and used a set of well worn principles. These are -

2.2.1 Occam's Razor - the simplest mechanism that explains the data is more likely to be the right one. This is the first law of the <expletive> obvious. It tells us that, above all else, only make new stuff up if you are forced to, ie if you really have exhausted all of the more straightforward options. Thomas Nagel is an American philosopher of mind, who (in)famously posed the question 'what is it (really) like to be a bat?'. Consider the following statement he made in his 1998 paper about the mind-body problem [6]- "...the powerful appearance of contingency in the relation between the functioning of the physical organism and the conscious mind ... must be an illusion." Why, for heaven's sake, would someone deliberately make such a statement, except of course to provoke me! If there is a law that is made by <he/she/it whose existence I vehemently deny>[7], then surely it is Occam's Razor. If the link between the 'physical organism' (ie the brain, controlling the body, I guess..) and the 'conscious mind' seems powerfully contingent, then Occam would say that this link is cause and effect. It's that simple. Nagel's early (1990s) statements also fall foul of what I call Holmes Shroud, the second law of common sense.

2.2.2 Pierce's Arrow - the conventional term for this kind of common sense reasoning is 'abduction', first attributed to C. S. Peirce. To non-scientists this term has an unfortunate homonym that means 'kidnapping', hence my suggestion of using a dynamic iconograph of a loosed arrow flying inexorably toward its 'bull' to indicate an implicit sense of 'fait accompli' ('done deal' in the US). It is the very transience of the skillfully loosed arrow which underlines the non-negotiable permanence of the target's fate. It is the shaft's flight that compels the commonsense imagination to pierce the target, without needing to wait for the arrowhead's 'unremarkable' landing. Note the use of the word 'unremarkable': if I suggest that the brain is a computer, basically hardware made from neurons, then I am setting the stage for the expectation of something 'fluid and showy' like software. The next step, that of suggesting that 'mind' is 'software', is meant to be almost obvious, 'unremarkable', implicit in the act of making the original choice. (Note - some older texts use the term Peirce's arrow to denote the '↓' (NOR) symbol.)

2.2.3 Holmes Shroud - if you have eliminated the impossible (covered the 'truth-corpses', or fallacies/untruths, with mortuary shrouds) then whatever remains, however improbable (ie corpse-like), MUST BE TRUE.

How do you know that I am right?

2.3 Now we get to the real nub of this first chapter - proving that mind (what is happening in my mind as I write this, and what is also happening in your mind as you read this) can be reduced to biomimetic machinery. How do you know that I am right? Put quite simply, you can't. What I can promise is that I have endeavoured to get as many of my logistical and epistemic ducks in a row as possible.

www.psychologytoday.com/blog/mindmelding/201207/ten-tests-theories-consciousness

The website above contains an article called 'ten tests for theories of consciousness' by Dr. William Hirstein. While Psychology Today is not a particularly academic source, I do regard Hirstein's Top Ten as worthy of mention. It seems to me they cover the basics of so-called 'access' consciousness quite well. Here they are-

2.3.1. Relate consciousness to mind. In this first bullet point item, Hirstein asks whether unconscious or 'hidden' states of mind can be said to exist, and if so, how. What is the relationship between 'visible' and 'invisible' cognition (a.k.a. 'cogitation', 'mentation', 'thinking'...)? In my systemology, thinking is that mental process whose output is either decisions or actions, or usually both, a hybrid form we customarily call 'behaviour'. Thinking is usually 'about' or directed toward the current, or germane situation/s, that is, the decisions/actions relate to this target situation/s in a causal/motivational manner. The behaviour called 'driving my car' usually involves (i) navigation towards a destination (ii) avoiding collisions (iii) obeying road laws. If the destination or route is a novel or unfamiliar one, the navigation component is performed consciously, as shown by some of the constituent steps being made slowly and 'deliberately', involving a high percentage of available attentional resources, and often involving some 'backtracking'. However, if the route and traffic conditions are well known, the opposite is usually true, with almost no attentional (ie consisting of 'loops' of conscious and voluntary target acquisition, resetting and reacquisition) resources deployed. It is very difficult not to apply Occam's Razor in this situation, and posit that the 'obvious' or 'simplest' mechanism is a conceptually straightforward computation in which cognitive processing depends on the availability of correct input information. In novel situations, the correct computational inputs are not stored in memory, and must be acquired in real-time (hence the slower speed, intermittent decision making, and occasional backtracking steps) via 'conscious' thought. In repeated, familiar situations, the correct computational inputs are stored in memory, and can be acquired easily via 'unconscious' thought, freeing attentional resources for other, higher priority tasks.
The following observations seem compelling. The use of the words 'conscious' and 'unconscious' seems rather mistaken, because it is not sensory awareness that is the primary switched resource, but motor control, ie volition. Instead of 'conscious' we might profitably use the more technically precise term, 'controlled'. Similarly, instead of 'unconscious', we might use the more technically precise term 'automatic'. This is precisely the semantic substitution chosen by Schneider & Shiffrin (1977), in their much cited, 'seminal' paper, 'Controlled and automatic human information processing'. In this classic 'match to sample' experiment, they found that controlled (voluntary) processing is load dependent, suggesting a serial search process is involved. Conversely, for search targets that were part of a familiar, ie memorised set, they found that automatic (involuntary) processing is load independent, suggesting a parallel search process is involved. Treisman and Riley (1969) found that whether information presented on a non-attended channel is processed depends on information type[8]. SS77 is a problem for those people who have convinced themselves that the brain is more than a computer, since it proves that a common (some might almost say, universal) mode of thought is patently computational.
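The difference between load-dependent and load-independent processing can be illustrated computationally. The Python sketch below is an analogy only and does not reproduce the experimental procedure of the 1977 paper: a self-terminating list scan stands in for serial 'controlled' search, and a hash-set membership test stands in for parallel 'automatic' search. The function names and the letter sets are assumptions made for the example.

```python
def controlled_search(target, memory_set):
    """Serial, self-terminating scan: the comparison count grows with set size."""
    comparisons = 0
    for item in memory_set:
        comparisons += 1
        if item == target:
            return True, comparisons
    return False, comparisons


def automatic_search(target, memorised):
    """Content-addressed lookup: counted as one step whatever the set size."""
    return target in memorised, 1


small_set = list("ABCD")
large_set = list("ABCDEFGH")

# Worst case for the serial scan: the target is the last item examined.
serial_small = controlled_search("D", small_set)
serial_large = controlled_search("H", large_set)

# The memorised (hashed) sets answer in a single step regardless of size.
parallel_small = automatic_search("D", frozenset(small_set))
parallel_large = automatic_search("H", frozenset(large_set))

print(serial_small, serial_large, parallel_small, parallel_large)
```

Doubling the set size doubles the worst-case cost of the serial scan but leaves the lookup cost unchanged, which is the qualitative pattern the paragraph above describes.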

My unique approach suggests a conception of subjectivity (a.k.a. phenomenology - how I hate that term!) in which our 'conscious/unconscious' (used in the vernacular way) state consists of two orthogonal ('Cartesian'?) components, one derived from the afferent stream of information, the other derived from the efferent stream. The consciousness component arises from thresholding of the sensory inputs, while the volition component arises from a similar binary thresholding of the motor channel outputs. In my scheme, four CxV combinations (C*V, C*I, U*V, U*I) are possible. For example, Libet's so-called paradox (I call it Libet's Misunderstanding) is easily accounted for once the concept of consciousness C (and its complement U) is separated from the concept of volition V (and its complement I), permitting the use of the U*V subtype to explain the real nature of the apparent inversion of neural time. In other words, voluntary acts are possible before one becomes conscious of them.
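The four-way classification can be written down directly as two independent binary thresholds. The Python sketch below is a minimal illustration: the function name `classify`, the numeric signal levels and the default threshold of 0.5 on each channel are hypothetical values chosen for the example, not quantities given in the text.

```python
def classify(sensory_level: float, motor_level: float,
             c_threshold: float = 0.5, v_threshold: float = 0.5) -> str:
    """Label a state by thresholding the input and output channels separately.

    Thresholds are illustrative assumptions. The two tests are independent,
    which is what makes the components orthogonal.
    """
    conscious = sensory_level >= c_threshold   # C if true, U otherwise
    voluntary = motor_level >= v_threshold     # V if true, I otherwise
    return ("C" if conscious else "U") + "*" + ("V" if voluntary else "I")


# All four combinations, including the U*V case used to account for Libet.
states = {
    "attended, deliberate": classify(0.9, 0.9),
    "attended, reflexive": classify(0.9, 0.1),
    "unattended, deliberate": classify(0.2, 0.9),
    "unattended, reflexive": classify(0.2, 0.1),
}
print(states)
```

Because neither test refers to the other, a voluntary output (V) can be registered while the sensory channel is still below threshold (U), which is the U*V combination invoked above.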

2.3.2. Relate consciousness to perception. This depends on how you define perception. In my theory, mind (the second circle in Figure 1) manages behaviour by cybernetic control of perception. Precise use of the terminology and associated definitions is critical to any really complex scientific theory.

2.3.3. Relate consciousness to dreams. The geometrical space which hosts both consciousness and dreams is provided by the cerebellum. The old idea that the cerebellum is just a motion coprocessor is just that- an old idea. In terms of Figure 1, this 'dream theater' is the P-lobe (the light green circle) of the level 3 TDE, the one that generates the sense of self, and of one's lived experience. Dreams are a part of sleep. Sleep is a specialised mode of unconsciousness whose purpose is twofold- (1) consolidation of declarative novelty- convert recently acquired episodic memories into semantic (general knowledge/ world state) form (2) consolidation of procedural novelty - convert recently acquired skills into 'muscle memory'. Dreams usually occur in REM sleep, after the last of three preceding NREM phases has concluded. In REM sleep the skills acquired are 'compiled down' into procedural form. Since this form is the same common-code as motor commands, the body is paralysed during REM sleep. Hirstein fails to mention the most important feature of thought, namely that it is linguistic, so that consciousness has the same narrative structure as the sentences in a novel! (from Daniel Dennett in 'Consciousness Explained'). Language has the formal structure of predicate (higher order) logic statements, which are resistant to 'frame problem' issues, by virtue of having free variables which allow anaphora and other features essential to linguistic narrative construction to be fixed by late binding. However, computation needs to have a propositional (zeroth order) logical form to be efficient enough to support real-time consciousness. Hence during sleep, higher order logic (the kind used by semantic knowledge) is 'compiled' into zeroth order logic. This is precisely why computer software is compiled, thus pointing out one of the many technical features that brains and computers have in common with one another.
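The 'compilation' step described above has a simple software counterpart: grounding a rule that contains free variables over a finite domain, so that every later query becomes a plain table lookup. The Python sketch below shows only this general technique. The domain, the single fact and the rule `above` are hypothetical examples, and nothing here is claimed to be how the brain, or the author's system, performs the conversion.

```python
from itertools import product

DOMAIN = ("cat", "dog", "mat")      # hypothetical finite domain of objects
FACTS = {("on", "cat", "mat")}      # hypothetical ground fact


def above(x, y):
    """Late-bound rule with free variables X and Y: above(X, Y) if on(X, Y)."""
    return ("on", x, y) in FACTS


def compile_rule(rule, domain):
    """Ground the rule over every binding, giving a propositional truth table."""
    return {(x, y): rule(x, y) for x, y in product(domain, repeat=2)}


# 'Compile time': every binding of (X, Y) is evaluated once and stored.
TABLE = compile_rule(above, DOMAIN)

# 'Run time': no variables are left to bind, only a fixed lookup.
print(len(TABLE), TABLE[("cat", "mat")], TABLE[("dog", "mat")])
```

The trade-off is the usual one for compilation: the table is fast to consult but fixed, while the rule is slower to evaluate but can be applied to bindings that were not anticipated.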

2.3.4. Relate consciousness to the self. The self is the third circle in the TDE-R fractal (figure 1). While the self and the mind are both subjective, it is the self which integrates memory into a unique conceptual perspective. While level 2 has subjective awareness, level 3 has self-consciousness, proper.

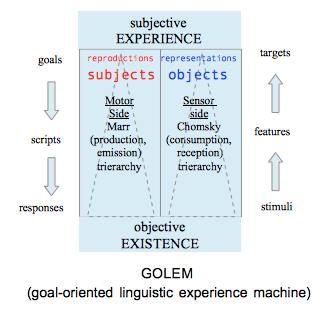

2.3.5. Relate consciousness to representation. In the GOLEM diagram, the bottom-up information hierarchy of the sensory channel is formally defined as creating representations (meaning-bearing structures). When those bottom-up processes produce one unique object that is the focus of the attentional spotlight, they are serially organised, and are thus defined as conscious.

2.3.6. Relate consciousness to the brain. The brain's neural ROM circuits form power sets (sets of sets), and it is this ability to take neural maps and produce meaningful submaps which gives rise to all of the mind's amazing capabilities, including consciousness. One fact that is not widely known is that the brain is a ROM. This fact should be added to Hirstein's hit list.
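For readers unfamiliar with the term, a power set is the set of all subsets of a given set. The short Python sketch below enumerates the 'submaps' of a three-element map. The element names are hypothetical placeholders, and the code illustrates the mathematical definition only, not any neural mechanism.

```python
from itertools import combinations


def power_set(items):
    """Return every subset of items, from the empty set up to the full set."""
    items = list(items)
    return [frozenset(c)
            for r in range(len(items) + 1)
            for c in combinations(items, r)]


# A 'map' of three hypothetical elements has 2**3 = 8 possible submaps.
neural_map = ("E1", "E2", "E3")
submaps = power_set(neural_map)
print(len(submaps))
```

The count doubles with every added element, which gives a sense of how quickly the number of candidate submaps grows.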

2.3.7. Explain what the function of consciousness is. Consciousness doesn't really have one function. Rather, it is a result of thresholding the sensory inputs, so that attentional resources are focussed on the most relevant incoming perceptual features, and we don't (metaphorically) drown in an ocean of info-chaos. Consciousness is a semantic salience filter based on the twin criteria of relevance and novelty.

2.3.8. Provide an account of the explanatory gap. I like to explain the difference between brain and mind as analogous to the difference between hardware and executing software processes. This analogy is the 'right kind' of obvious (see 'Pierce's Arrow' above).

2.3.9. Provide an account of error for the other major theories. This one is easy. Since there are no other major theories of human cognition, there is no other account to explain. I am the only person, correct or incorrect, who has managed to join all the dots with a plausible scientific explanatory scheme. Admittedly, there are human cognition software programs such as CLARION, Soar and ACT-R which claim to simulate one or more aspects of human cognition. The three systems mentioned here even offer simulated BOLD plots. That is, the user defines the cognitive task in an appropriate parametric format, and the program outputs a typical fMRI scan. However, to the very best of my knowledge, except for myself, no one to date has constructed a scientific explanation for all known aspects of human cognition.

2.3.10. Relate your account to the history of the study of consciousness. I think I have provided my readers with a rich and detailed account of how and where my theory fits in with the efforts of others, such as Kant, Uexküll, Heidegger, Wiener, Marr, Skinner, Chomsky, etc. Please look at my other websites. Note that until recently, it wasn't wise for an ambitious student to admit to studying consciousness. Instead, euphemistic topic headings were used. For example, in a pioneering paper by Schneider & Shiffrin (1977), the authors use 'controlled' instead of 'voluntary', and 'automatic' instead of 'involuntary'. Tulving himself used the somewhat cryptic terms noetic, anoetic and autonoetic to describe states of consciousness that accompany the three main knowledge sub-types. Hopefully, in future work around this topic, euphemisms like these will no longer be needed.

2.4.1 My research started with much more modest aims - I found a rather 'obvious' solution to Libet's Paradox (LP). The 'obvious' part (which was not, as it happened, very obvious at all) is understanding that Consciousness and Volition are two sides of the same coin. Here is (briefly) how I did it. Firstly, one needs to understand exactly what idea lies behind the concept of consciousness - this idea is the (binary) thresholding of a system's input channel. Inputs that exceed a given threshold are significant enough to be attended to consciously, those that fail to exceed that value are not. The next idea, which immediately follows from the last one, is this - if an importance threshold is applied to the input channel, then surely one should be applied to the motor channel too, if only for the sake of a symmetrical system design. This second step then results in the creation of Voluntary (V) and Involuntary (I) cognitive states. Combining these new states with consciousness (C) and unconsciousness (U) results in four new hybrid cognitive states. One of these new states, VxU (unconscious voluntary state) provides a solution to LP. More importantly, however, these four new states provide an agent with a means of characterising automatic and autonomic motor states, as well as proximal (self) and distal (other) conscious states. The end product of all these ideas is the TDE canonical computational pattern.

2.4.2 At this point in the research process, I developed a significant amount of self-doubt. The TDE seemed a good idea, but was it a true one, that is, was it real? I then found that some parts of the TDE seemed to describe hardware level features, and other parts seemed more to resemble software. I then created the TDE-R, a recursive version of the TDE, which resolved this apparent inconsistency. Best of all, TDE-R had two agreements with real psychological data: (A) TDE-R predicted an asymmetrical distribution of cognitive function, (B) TDE-R suggested higher order analogs of the four basic cerebral lobe types (F lobe = frontal/motor, P lobe = parietal/sensor, L lobe = limbic/emotional, T lobe = temporal/semantic memory) that matched a variant of Endel Tulving's widely accepted distribution of knowledge subtypes (ie episodic knowledge in LCH, semantic knowledge in RCH, conative knowledge in limbic system, procedural knowledge in cerebellum/basal ganglia). Please refer to my other websites for a detailed description of my early discoveries.

Decoding the TDE-R master fractal (figure 1)

2.5.1 The first task is to code the operating system software, which is called the Tricyclic Differential Engine - Recursive. TDE-R is TGM's equivalent to Mac OS X or Microsoft Windows NT/7/10. If, like me, you did little or no recursive programming as an undergraduate, you should do the professional thing, and spend a day or two struggling at undergrad level with the basics of recursion. Most modern HLLs (such as all the 'C' dialects) allow recursion, but for this project, you will need to be more than merely very good at it - nothing less than outstanding will cut the mustard, I'm afraid. This project may represent your hardest coding challenge to date; certainly it was mine! Therefore, why not think outside the box: for example, spend an hour or two recursively scripting Windows batch files, and also revise your rusty Unix 'fork' and 'join' operators. The end goal, of course, is to invent a canonical form that is minimal yet able to grow into the highest scale of magnitude (the third level) in figure 1, the level of the 'self'. Got to walk like an Egyptian.
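As a warm-up exercise in the kind of recursion involved, the Python sketch below builds a nested structure three levels deep from one small repeating unit. It is purely illustrative and is not the TDE-R design: the assumption that each of the four lobe types (F, P, L, T) contains a complete copy of the unit at the next level down is hypothetical, made only so that there is something concrete to recurse on.

```python
LOBES = ("F", "P", "L", "T")   # the four lobe types named in 2.4.2


def build_unit(level):
    """Hypothetical recursive unit: each lobe at level n holds a level n-1 unit."""
    if level == 0:
        return None   # base case: a lobe with no finer structure
    return {lobe: build_unit(level - 1) for lobe in LOBES}


def count_lobes(unit):
    """Count every lobe in the nested structure, at all levels."""
    if unit is None:
        return 0
    return sum(1 + count_lobes(sub) for sub in unit.values())


# The same four-lobe form, grown to one, two and three levels.
sizes = [count_lobes(build_unit(n)) for n in (1, 2, 3)]
print(sizes)
```

Both functions have the two ingredients every recursive routine needs: a base case that stops the descent, and a step that applies the same definition to a smaller problem.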

What exactly is software anyway?

2.5.2 After doing 3 or 4 years of undergraduate computer science, and then either getting work in the industry or staying at uni a bit longer to get honours etc (as I did), most of us would be reluctant to ask ourselves this question. We might respond somewhat indignantly by insisting that, of course I know what software is. In this research project, however, our usual obfuscation will be inadequate and unwelcome. We need to develop a shared, crystal clear idea of what software is, what it does, how and why. While a more complete explanation is included in www.cybercognition.., the short version follows. People are familiar with the idea of a mechanism to generate a motion, copy a shape, etc. All we need to do with this definition is to then imagine another mechanism, a meta-mechanism, if you will, whose allotted task is to model the same situations as those simulated by the first one. Actually, that's not exactly right. Each mechanism in this chain of simulacra should be able to 'envelop' the simulation capacity of its adjacent links. So-called 'hardware' contains an incredibly capable modelling mechanism, digital memory. This is basically just a field of bytes, or 'words' of a certain size, eg 32 or 64 bit 'width' or 'length'.../cont'd

ASIDE: it doesn't matter about the words, as long as (a) you are sure what they mean (b) you draw some kind of diagram describing the core semantics or meaning of your understanding. Diagrams are a kind of 'lingua franca' which (a) use visual/graphic concepts, eg shapes, lines and simple text labels (semantic 'anchors') to indicate basic connections between concepts (b) allow your insight, discoveries and hard work to be expressed in a way that is agnostic of social and scientific speciality boundaries. All the great geniuses use diagrams; indeed, they were observed from an early age to be graphically focussed. The reason is simple: diagrams are much less ambiguous than words! The other side of this coin is also worthy of analysis: the highly ambiguous nature of almost any sentence you choose is itself a feature of my cognitive model. GOLEM variables are deterministic; they are declaratively, not procedurally, defined. For example, a function X and a variable X are equivalent: they are both constructed from a set of deterministically collected exemplars, adjacent points on a neural ROM, or fixed data map.

cont'd/....The number of different data types the memory 'words' can express is far greater than the number we actually use. In fact, we only use one (global compound=fractal) data type, the file hierarchy. When we get a new USB stick or hard drive, we ask 'how is it formatted'? That is, does it have one of a limited set of quasi-proprietary (ie platform-based) hierarchical file systems, eg NTFS, HFS, DOS etc. We don't know or care what is in the files before we start the modelling (ie data storage/ computation) process. Consequently, there are two sources of flexibility in the basic formatted digital memory (FDM): (a) the contents of the files (which can of course contain as many more meta-mechanisms as required, consistent with overall capacity constraints and labelling conventions), and (b) the depth and breadth (ie branching factors) of the file system trees.

2.5.3 In other words, the structure of FDM doesn't constrain the modelling very much at all. The user can use any shape of file system tree, and populate it with any mixture of file types. Most important of all, this means that all FDMs are FRACTAL, just like our brains and minds. This makes the task of building biomimetic AI (=BI) much easier.

**It suggests that, with a change in software (eg internet download), every computer could potentially be made into a new animal or human mind.**

BRAIN-COMPUTER EQUIVALENCE

The general similarity between brains and computers has been widely accepted for some time. However, GOLEM theory strongly suggests this similarity to be an identity at the 'firmware' or 'platform' level. It is easier from now on to accept the truth of pan-fractalism, namely, that ALL important systems, natural or artificial are fractal. That is, all human products and activities are every bit as much a part of nature as a leaf or a worm or a glacier, or for that matter, a galaxy. We should therefore, by this argument, cease to use the word 'nature', since one of its main semantic duties is to conceptually separate human-made objects and operations from everything else that we experience as real[3]. We still use the word 'nature' because of its other (and arguably, far more important) semantic duty, which is to promote environmental and ecological conservation.

2.6.1 xxxxxx

Set membership and its significance in the GOLEM simulation

2.7.1 One of the main barriers to wider acceptance of GOLEM is the way it uses finite recursive sets to create hierarchical data structures. If a name N1 is attached to a group of three other data entities E1, E2 and E3, then (a) N1 is a finite set with elements E1, E2, and E3 (b) N1 can also be interpreted as a 'parent' node in a hierarchy such as a file system tree, and E1, E2, E3 can be interpreted as its 'children' or 'leaves'. Nested finite sets and trees can thus be regarded as two descriptions of the same underlying structure. This is an empowering idea, since it allows us to view any system which can be recursively divided into sub-systems as a hierarchy (see figure 5). Note that the naming scheme used here, that is, E1...En, gives the wrong impression that the entities are ranked or ordered in some way. Sets are defined as containing distinct, unique elements. That is all. Sets contain symbols, which are unique by definition, not tokens, which are copies of symbols, again by definition. Convenient mnemonic: paper money notes or e-money are symbols (distinct serial numbers, or prime numbers) compared to coins, which are tokens.
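The set reading and the tree reading of N1 can be placed side by side in a few lines. This is a hedged sketch only: the dictionary `tree`, the extra elements `E1a` and `E1b`, and the helper `leaves` are invented for illustration and are not taken from the GOLEM simulation.

```python
# Reading (a): N1 is a finite set. Order carries no meaning and
# duplicate entries collapse into one element.
N1 = frozenset({"E1", "E2", "E3"})
print(N1 == frozenset({"E3", "E1", "E2"}))   # True
print(len(frozenset(["E1", "E1", "E2"])))    # 2

# Reading (b): the same data as a tree. Each name maps to the set of
# its children; a name with no entry is a leaf. E1a and E1b are
# hypothetical sub-elements added to show one more level of nesting.
tree = {
    "N1": frozenset({"E1", "E2", "E3"}),
    "E1": frozenset({"E1a", "E1b"}),
}


def leaves(name, tree):
    """Recursively collect the leaf names found below `name`."""
    children = tree.get(name, frozenset())
    if not children:
        return frozenset({name})
    found = frozenset()
    for child in children:
        found |= leaves(child, tree)
    return found


print(sorted(leaves("N1", tree)))  # ['E1a', 'E1b', 'E2', 'E3']
```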

2.7.2 The portrayal of a set of elements is not just a low level computational element. If the elements are logical propositions (eg facts in a knowledge base, eg the right cerebral hemisphere in Tulving's scheme) then this 'simple' collection can act as a logic programming construct called a Horn clause, (p ^ q ^ ... ^ t) --> u, which can be interpreted as "to show u, show p and show q and ... and show t". Solving such 'goal clauses' in the propositional case is P-complete, where the class P contains all problems considered 'tractable' on a sequential computer. Consider the lowest level of GOLEM, which contains the primitive elements si and ri, that is, the ith stimulus and the ith response.
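The reading "to show u, show p and show q and ... and show t" of a Horn clause can be sketched as a tiny backward-chaining prover. This is an illustrative assumption, not GOLEM code: the function `prove`, the encoding of rules as a dictionary from head to a list of bodies, and the sample facts are all hypothetical, and the sketch makes no attempt at efficiency.

```python
def prove(goal, facts, rules, seen=frozenset()):
    """Backward chaining over propositional definite (Horn) clauses.

    `rules` maps a head proposition to a list of bodies, where each
    body is a tuple of propositions that must all be shown.
    """
    if goal in facts:
        return True
    if goal in seen:  # already trying to show this goal: avoid looping
        return False
    for body in rules.get(goal, []):
        if all(prove(sub, facts, rules, seen | {goal}) for sub in body):
            return True
    return False


facts = {"p", "q", "t"}
rules = {
    "u": [("p", "q", "t")],  # (p ^ q ^ t) --> u
    "v": [("u", "w")],       # (u ^ w) --> v
}

print(prove("u", facts, rules))  # True: p, q and t are all known facts
print(prove("v", facts, rules))  # False: w can never be shown
```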

2.7.3 Normally, we think of dendrites (the post-synaptic part of the interface between neurons) as performing arithmetic operations on quantities which resemble floating-point or real-valued numbers. It is, however, theoretically possible for a single neuron to implement logical, binary (boolean) functions using the individual axo-dendritic inputs, if we limit the range of values each input can adopt. Consider a neuron which has n 'normalised' inputs, that is, they each have binary signal strengths drawn from the set {0, 1}, so they are either completely ON or completely OFF. If the (ganged) threshold value Tg = n * (1) = n, then the neuron effectively implements logical 'AND'ing of the inputs within a single time slice, data sample window or saccade. That is, only IF every input line at time 't' is at maximum value '1', THEN the neuron will fire, ELSE not. This numerical 'kludge' is equivalent to testing the body of a 'Horn clause', the construct which, evaluated by 'backward chaining', underpins the so-called '5th generation' logic programming language Prolog. Therefore, in theory, GOLEM can be programmed to perform logic programming algorithms. Now consider the complementary situation, in which the threshold value is set to T = 1. In this case, only one 'high' (or 'ON') input line is needed to cause the neuron to fire, thereby implementing OR, the inclusive 'or' (not XOR, since the neuron also fires when several lines are high). In practice, the first input line to 'go high' will cause the neuron to fire, effectively ignoring the other (n-1) input values, due to the neuron's refractory period which immediately follows each discharge event.
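The two threshold settings can be checked with a minimal model of a binary threshold unit. The sketch assumes an idealised neuron that simply sums its inputs within one time slice; the function names `fires`, `and_neuron` and `or_neuron` are hypothetical, and the refractory period is not modelled. Note that a unit with threshold 1 also fires when several inputs are ON, so what it computes is the inclusive OR.

```python
def fires(inputs, threshold):
    """Return True when the summed binary inputs reach the threshold."""
    if any(x not in (0, 1) for x in inputs):
        raise ValueError("inputs must be binary: 0 (OFF) or 1 (ON)")
    return sum(inputs) >= threshold


def and_neuron(inputs):
    """Threshold n: fires only if every one of the n inputs is ON."""
    return fires(inputs, len(inputs))


def or_neuron(inputs):
    """Threshold 1: fires if at least one input is ON."""
    return fires(inputs, 1)


print(and_neuron([1, 1, 1]))  # True
print(and_neuron([1, 0, 1]))  # False
print(or_neuron([0, 0, 1]))   # True
print(or_neuron([1, 1, 0]))   # True: more than one ON input still fires
print(or_neuron([0, 0, 0]))   # False
```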

The importance of understanding what meaning is to a machine

2.8.1 One of the most important findings for scientific research (that is, creating and developing true theories of reality) is Peirce's triadic theory of meaning (he called it semiotics, or semiosis). It is triadic, and not dyadic like Ferdinand de Saussure's conception, because in addition to Saussure's standard ideas of language form and function, Peirce introduced a third, recursive, element, that of hierarchical, or generative, context. This brings us to the modern conception of meaning, which is roughly equivalent to hierarchical context dependency. These relationships, which were discovered/invented many years previously by other people, are for the first time made clear and unambiguous by GOLEM theory. Meaning is created in the sensor channel, via the hierarchical bottom-up reconstruction of representations, using a convergent paradigm. That is, constituents 'come together' to make new meaning sets. Conversely, message form (syntax) is produced in the motor channel via the top-down hierarchical (recursive) creation of reproductions, using a divergent paradigm. Meaning is split like white light through a prism into a color spectrum, namely the message constituents. That is, the dependents 'split apart' to make new message elements.

2.8.2 The evolutionary step of becoming laterally symmetric corresponds structurally to the joining together in the DNA of gene code for two mirror image organisms, which now form left and right halves of the same individual. The result of this operation on cognition is quite profound. The single brains, which each have a sensor and a motor channel (each with two thresholded states), therefore each have 4 possible states, {Conscious, Unconscious, Voluntary, Involuntary}. However, when they are rotated through 90 degrees, and then combined, the new brain has not 8 but only 4 distinct compound states, {C*V, C*I, U*V, U*I}. When a GOLEM is constructed for the combined, laterally symmetrical, global dual brain, this GOLEM has two new global processes, animation and automation, as in figure 7.
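The count of four compound states is simply the Cartesian product of the two sensor-channel states with the two motor-channel states. A minimal sketch, in which the variable names are assumptions made for illustration:

```python
from itertools import product

sensor_states = ("C", "U")  # Conscious, Unconscious
motor_states = ("V", "I")   # Voluntary, Involuntary

# Pair every sensor-channel state with every motor-channel state.
compound_states = [f"{s}*{m}" for s, m in product(sensor_states, motor_states)]

print(compound_states)       # ['C*V', 'C*I', 'U*V', 'U*I']
print(len(compound_states))  # 4
```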

2.9.1 xxxx

2.10

2.11 Searle's Chinese Room (SCR) is a thought experiment which at first blush seems deeply flawed in design. One's first impression is not good: just exactly how can a famous professional philosopher like Searle construct such a prima facie flawed thought experiment? John Searle (or me, or any other subjectively-conferred self) receives input sentences from the 'outside world', all written in Chinese, a language neither he nor I (nor, presumably, you) understand. He has been thoughtfully provided with a 'user manual' for the Chinese (or mumboese, or whatever...) foreign language, constructed as a lookup table of matching pairs of sentences X → X'. When Searle (or you, or me) receives a sentence X(j) through the slot in the door, we look up the matching reply sentence X'(j) in the user manual, write it down on a piece of paper, and push our reply out through the slot. Presumably, the FLS (foreign language speaking) person or people near the slot in the outside world, the same ones who initially input the sentence X(j), will read the reply X'(j) and understand it. What is wrong with this situation? The most serious objection is that the whole set-up lacks semantics, or meaning. Knowing a meaning has two requirements: one must (i) be able to read the message, and (ii) have access to its full, recursive context. Treating meaning as a three-parameter (symbol, referent, context) function is the main discovery of C.S. Peirce, and it supersedes the view of Ferdinand de Saussure, who posited that meaning was a two-parameter (symbol, referent) function. In fact, the objection above is not so serious, because both the Chinese people outside the room and John Searle inside the room share many if not most features of their informational context. Each of them knows the experiential meanings of common and uncommon human situations. So it is not that Searle is unable to understand what they mean, it is just that he is unable to gain precise access to it. He just doesn't know the Chinese/Martian/whatever syntax for the situations for which he does know the English syntax. If SCR makes us aware of one factor above all others, it is semantics, and the key role it plays 'behind the scenes' in all intelligent cognitive behaviour.
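The room's 'user manual' is, computationally, nothing more than a lookup table. The sketch below is a hypothetical illustration: the placeholder strings stand for the sentences X(j) and X'(j), and the names `manual` and `room` are invented here. The point to notice is that the function matches symbols only and never consults any context.

```python
# The 'user manual': matching pairs X(j) -> X'(j). The strings are
# placeholders for sentences in a language the operator cannot read.
manual = {
    "X(1)": "X'(1)",
    "X(2)": "X'(2)",
    "X(3)": "X'(3)",
}


def room(sentence, manual):
    """Return the scripted reply for a sentence, or None if unlisted.

    Only the form of the symbol is compared; nothing here represents
    a referent or a context.
    """
    return manual.get(sentence)


print(room("X(2)", manual))  # X'(2)
print(room("X(9)", manual))  # None: the manual has no matching entry
```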

2.12 Nagel's 'What is it like to be a bat' is American philosopher Thomas Nagel's exemplary method of demonstrating an objection to conventional views of AI. This example seems (to Nagel) to suggest that the subjective nature of consciousness undermines any attempt to explain consciousness via objective, reductionist means. I am not sure how Nagel arrived at his objection, although I am glad he did, because he provides an excellent opportunity to put my theory through its paces. In [6] Nagel reveals a viewpoint which does seem very much like a kind of two-way, duplex epiphenomenalism to me. This paper was written in 1998, and Nagel may well have updated his views since then.

My reasoning proceeds as follows: by examining the details of the GOLEM/TDE model common to both humans and bats, we can for the first time put ourselves in place of the bat. The GOLEM/TDE is an 'embodied, language of thought' model. Therefore, putting ourselves in the 'bat's shoes', if you will, is not impossible. Instead, it is more like learning another (embodied) language, possible in theory, although perhaps impossible in practice. Both bats and humans share two levels of the three level TDE-R hierarchy (see Figure 1). The first TDE-R level, whose memory links are possibly formed during the 'infant babbling' phase of mammalian development, maps body motions to proprioceptive sensations. The second TDE-R level maps goal-directed behaviours (implemented as spatial movements, as opposed to level 1 body motions, a distinction first recognised by 19th century neuroscientist John Hughlings-Jackson) to limb and torso positions. In terms of embodied computational linguistics, the behaviours represent complete 'sentences', which are 'effects', while the limb and torso positions represent a remappable 'alphabet' of 'causes'. Together, they form a complete and functional system of cause-effect mappings. At both level 1 and level 2, the reader should note that subjective variables are represented (proprioception, and current-goal cybernetic differentials; remember that the 'D' in TDE stands for 'differential'). The use of subjective variables in this way was first demonstrated in the 1930s by Jakob von Uexkull [7], from ideas and arguments first attributed to Immanuel Kant.

2.13 Chomsky's minimalist program is an explanation of observed linguistic behaviour which relies on the existence of certain syntactic transformations. Chomsky's approach has been criticised as excessively 'syntactic' but critics often fail to follow this up with necessary technical details. GOLEM/TDE theory provides one way of teasing out the requisite details. My theory began with the suggestion that instead of thinking about dependency and constituency as two competing grammar theories, why not apply them in a complementary manner (or, as my British father liked to say, 'horses for courses'). That is, use constituency (eg phrases etc) as a way of structuring efferent (motor channel, syntactic, output) activity, and dependency (eg subject-predicate patterns) as a way of structuring afferent (sensor channel, semantic, input) activity.

2.14.1 xxxxx

2.15.1 xxxxx

2.16.1 After graduating from Flinders University in South Australia (this author's alma mater), Rodney Brooks moved to the US, and made a name for himself at MIT, where he wrote a seminal paper [12] describing where he thought that AI had failed to live up to its early promise. In particular, he criticises the top-down approach, which he calls the Sense-Model-Plan-Act (SMPA) model. According to Brooks, AI has inherited this methodology primarily from the computer programming domain. Unfortunately, it does not 'scale up' from the simplistic 'blocks world' level to the degree of complexity and real-time demands of everyday robotic environments. Brooks is a very good engineer, and has made a new robotic exemplar to illustrate each new advance in his theory. As a result of this specific factor, as well as the high global visibility of all MIT research papers and associated activities in general, he has the 'world's ear'. Unfortunately, he has not taken as full advantage of this channel as he otherwise might have, had he been able to describe his proposed solution effectively. Brooks' main catch phrase is 'Intelligence without Representation'.

2.16.2 Cutting to the chase, what Brooks has failed to say is that the solution to the problem is PCT [13], or perceptual control theory [14]. Both this author and Powers independently discovered PCT, but the first person to have done so is Jakob von Uexkull, back in the 1930s. Incredibly, although Brooks never mentions PCT in any form, he actually does mention Uexkull in a PowerPoint presentation [15], in connection with Uexkull's concept of Merkwelt. The real transformation that the use of PCT forces upon us is that of replacing objective viewpoints with the subjective stance. The global databases ('world models') that Brooks so condemns are quintessentially objective, or viewpoint free. They assume complete access to information, clearly an unrealistic strategy in a dynamic world. In PCT/TDE, behaviour is based upon control of a percept (eg a crosshair in a gunsight), which is a much more economical way of representing the data pertaining to robotic control and trajectory planning.
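The gunsight example can be sketched as a simple negative feedback loop in which the controller acts only on what it perceives. This is a minimal illustration under stated assumptions, not an implementation of the TDE or of Powers' full theory: the one-dimensional setting, the proportional gain and the names `perceive` and `track` are all hypothetical. The controller holds no world model and never reads the target's position directly; it only drives the perceived offset toward a reference value of zero.

```python
def perceive(aim, target):
    """The environment's side of the loop: the offset seen in the sight."""
    return target - aim


def track(target, aim=0.0, reference=0.0, gain=0.5, steps=20):
    """Adjust `aim` until the perceived offset matches the reference.

    Only the percept and the reference enter the control law.
    """
    for _ in range(steps):
        percept = perceive(aim, target)  # what the controller experiences
        error = reference - percept      # difference from the desired percept
        aim -= gain * error              # act so as to reduce the error
    return aim


final_aim = track(target=10.0)
print(abs(final_aim - 10.0) < 1e-3)  # True: the crosshair ends on the target
```

With a gain of 0.5 the remaining offset halves on every step, so twenty steps are far more than this toy case needs.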

2.16.3 Like a good engineer, Brooks is much better at implementation. He describes in great detail a working methodology in which functional layers work in parallel and can be composed incrementally. Brooks calls this concept “subsumption architecture”. At every stage in the process, one has a working organism. Intelligence is built up incrementally, in a manner reminiscent of evolution. Thus, claims Brooks, subsumption is more likely to be 'biologically plausible'.
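The incremental flavour of this approach can be hinted at with a simplified priority arbiter. This is only a sketch under loud assumptions: Brooks builds his layers from networks of augmented finite state machines with suppression and inhibition wires, whereas the code below reduces each layer to a hypothetical function that either proposes a command or stays silent, with later-added layers overriding earlier ones. The layer names, sensor keys and commands are invented for illustration.

```python
def avoid(sensors):
    """Oldest layer: always has an answer, so the robot always works."""
    return "turn_away" if sensors.get("obstacle_near") else "stay"


def wander(sensors):
    """Second layer: move about when the way is clear, else stay silent."""
    return None if sensors.get("obstacle_near") else "forward"


def seek_light(sensors):
    """Third layer: head for a visible light when the way is clear."""
    if sensors.get("light_visible") and not sensors.get("obstacle_near"):
        return "head_to_light"
    return None


def act(layers, sensors):
    """Let each layer speak in turn; a newer layer's proposal overrides."""
    command = None
    for layer in layers:  # oldest layer first
        proposal = layer(sensors)
        if proposal is not None:
            command = proposal
    return command


clear = {"obstacle_near": False, "light_visible": True}
blocked = {"obstacle_near": True, "light_visible": True}

print(act([avoid], clear))                        # stay
print(act([avoid, wander], clear))                # forward
print(act([avoid, wander, seek_light], clear))    # head_to_light
print(act([avoid, wander, seek_light], blocked))  # turn_away
```

Each prefix of the layer list is a complete working controller, which mirrors the claim that one has a working organism at every stage.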

2.17.1 George Lakoff is an interesting guy. Recently (time of writing is 2017/18) Lakoff has held a point of view which can be (not unfairly, I hope) summarised as 'the mind is the brain'. Our mind, claims Lakoff, is nothing more than the sum total of all the neural circuits we have formed ('forged by experience') during our lives. This idea is remarkably close to the idea that underpins the TDE's GOLEM: our minds are (fractally) constructed from intertwined pairs of subjectively and objectively formed neural circuits. I'll put a GOLEM representation here so you don't have to go mouse hunting all over the place! Below the GOLEM diagram, I've put a direct link to one of Lakoff's recent YouTube videos. This one is from 2015; I watched an almost identical one he made in 2014, and one of his UCB (Berkeley) lectures from 2016 which covered the same ideas. He has also been making comments about (President) Trump. My advice, should he choose to take it, is the same as I gave to Chomsky: stick to what you know, Science, and leave politics for the cheats and liars and con-men. I'm sorry, but the older I get, the less I trust them. Enough said.

Lakoff's ideas about situated memory are not unlike mine, or those of Grossberg. Unlike memory in a digital computer, memory in the brain is not syntactic in nature, ie relocatable, but is very much situated within semantic units, each of which is embedded in neural circuitry.

References

1. Dyer, M.C. (2012) Tricyclic Differential Engine-Recursive (TDE-R)- A bioplausible Turing Machine. B.Sc Honours Thesis, Flinders University of South Australia.

2. The descriptive progression goes from (i) the most mechanical robot, clockwork muppet, telepresence (remote control) drone, then (ii) mechanical with a little intelligent behaviour (Android), then (iii) semi-biological with peri-human levels of intelligence, behaviour and language (Cyborgs) to (iv) the ultrahuman

{Robot--> Android --> Cyborg --> Human --> Ultrahuman}. Q. Where do robots keep their crockery and cutlery? A. In the cyborg!

3. The idea, as well as the subsequent glossy book, is due to famous AI futurist theorizer, Ray Kurzweil.

4. The machines can do the work! Capital!

5. The logician C.S. Peirce (so brilliant we still haven't discovered half the great ideas in his published and unpublished works) said of 'common-sense' or abductive reasoning, that it involved a guided guesswork process. The hypotheses so generated were tested to see if they generated qualitatively unremarkable results. If so, that hypothesis was accepted. An example is the mind-body problem. Computer software/hardware distinctions generate very similar comparisons to those raised by the mind-body problem. Hence the mind is 'unremarkably' explained by saying that it is a kind of computer software running on the brain (the 'hardware'). Even people who vehemently profess to believe in 'weird' philosophical stances show their 'real' belief system when they have a personal encounter with mental illness. Their behaviour (a patent willingness to take, and implied faith in the efficacy of, psychotropic and pharmaceutical chemicals, not to mention the professional advice of the prescribing doctor) gives them away: both the use of the medical system and the ingestion of its most powerful therapeutic tool, ie drugs, indicate a strong adherence to the 'common sense' materialist/monist position.

6. Nagel, T. (1998) Conceiving the impossible and the mind-body problem. In my treatment of Nagel, I pull few punches, but don't for one moment think that I dismiss his famous 'bat' objection. He may have used an unusual, almost illogical route, but whatever way he got there, he did it. The TDE demonstrates the 'nuts and bolts' of semantic (ie symbolic) grounding. We can know how a bat feels if our 'mind' software is embodied by the same 'brain' firmware that a bat has. Basically, this boils down to a comparison of cerebellums. This simple comparison produces a very uncomfortable moment for most people: it demonstrates that not only do so-called 'lower' animals have almost identical basic emotion sets to humans, they probably have fairly similar mental processes when doing everyday things like eating, feeding baby, having sex with the 'missus', going out to work in the morning and coming home to the roost at night, etc. Hey, they are mammals like us. When we cut them, they bleed; when we hurt them, they feel pain. Their hormonal systems are almost identical, and the anatomical distribution of their neurotransmitters certainly is, for all intents and purposes. Take a moment to consider this sad example of our rank hypocrisy: we use lab rats (which, like bats, are mammals) to test human pain relief medicines for efficacy as well as side effects, then we glibly claim that 'animals can't feel pain'.

7. Uexkull invented the terms Umwelt, Umgebung and Innenwelt. This was the first effective labelling of the subjective variables alluded to by William James and Hughlings-Jackson.

8. Their findings reinforce the earlier result of Treisman and Riley (1969), who found that whether information presented on a non-attended channel is processed depends on information type. SS77 and other similar findings proved that Broadbent's model of an attention switching filter was incorrect.

9. Zwart, J-W (1997) The Minimalist Program - review article. J. Linguistics Cambridge University Press.

10. WOM = write-only memory, initially proposed as a joke, it is in fact a very serious category, equivalent to open-loop, feedforward computation.

11. ROM = read-only memory. The various types of ROM are distinguished from each other by (1) mean access time (2) method of erasing (if any).

12. Brooks, R. A. (1991) Intelligence without Reason. MIT-AI-memo 1293.

13. This author discovered the theory that underpins PCT independently of its inventor (William T. 'Bill' Powers). The abstract machine which implements this theory is also known as the Tricyclic Differential Engine (TDE), the name invented by Mr Dyer.

14. Stanford Encyclopedia of Philosophy (rev. 2016) Consciousness & Intentionality.

15. http://cosy.informatik.uni-bremen.de/sites/default/files/IntelliWoRepres.pdf

16. FYI, Baars suggests that consciousness has the structure of a shared global workspace, and that intentional items have a 'democratic' type of global access to the information content of both short-term (buffer) and consolidated (archive) memory.

17. Readers may or may not remember the difference- Special Relativity describes the events and situations which apply to inertial reference frames, ie those which are either stationary, or moving at constant velocity, without any measurable acceleration. General Relativity relaxes the constraints by analysing the same variables, but for non-inertial (ie accelerating or rotating) frames. Einstein brought several key innovations to the table. He invented an extremely compact way of depicting polynomial forms of row and column (covariant and contravariant) matrices, a step which minimised the unwieldiness of the strings of symbols he needed to shift around in his short-term, visuospatial memory. His key insight in General Relativity was that, since the velocity of all electromagnetic waves/particles is effectively constant (being equal to c, the in vacuo value of luminary velocity), and the distances involved are literally astronomical in magnitude, we are then justified in conceiving of our universe as being not a 3 dimensional space through which we move, but a 4 dimensional manifold , with the usual 3 spatial dimensions augmented by a fourth dimension, spatially equivalent to a constant scalar multiple of time, c(time), where the value of the constant, c = the speed of light.

Copyright (2016)- do not copy without attribution- Charles Dyer BE(Mech) BSc(Hons)